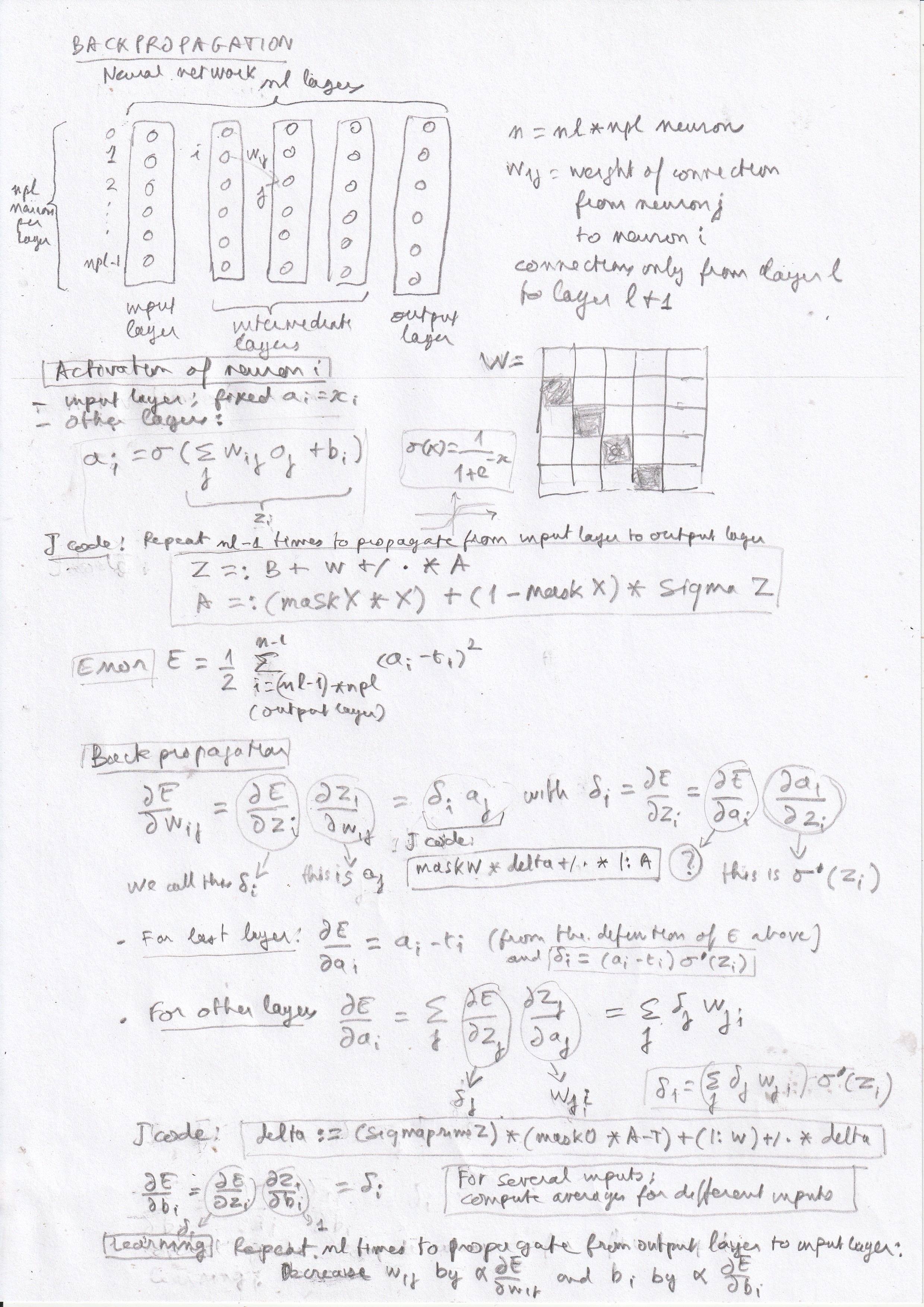

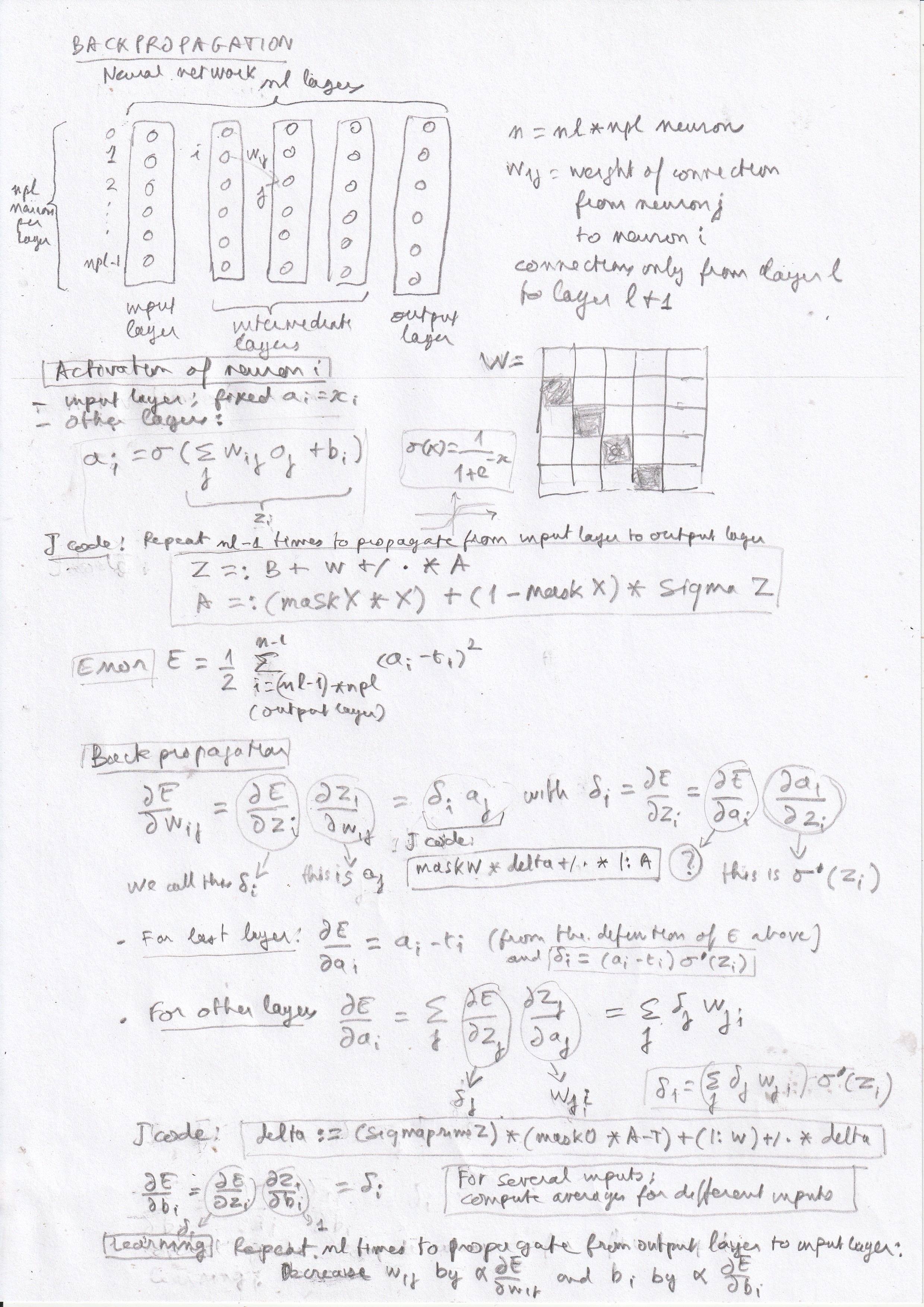

Backpropagation, or backward propagation of errors, is a method for tuning the connection weights of a neural network. It uses gradient descent to minimize the error, that is, the distance between the outputs produced by the network and the expected outputs.
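To make the definition concrete, here is one common way to write it down. The text does not say which distance is used, so the squared Euclidean distance below is an assumption, and the notation is introduced here for illustration only: $y_k$ are the network outputs, $t_k$ the expected outputs, $w_{ij}$ the weight of the connection from neuron $i$ to neuron $j$, and $\eta > 0$ a learning rate.

$$
E = \frac{1}{2} \sum_k (y_k - t_k)^2, \qquad w_{ij} \leftarrow w_{ij} - \eta \, \frac{\partial E}{\partial w_{ij}}
$$

Each gradient descent step moves every weight a small distance against the gradient of the error. Backpropagation is the procedure that computes the partial derivatives $\partial E / \partial w_{ij}$, working from the output neurons back toward the inputs.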

Usually, a neural network is structured in layers, with connections only from the neurons in one layer to the neurons in the following layer. We take a different approach here: we assume a priori that any connection scheme is possible, and we introduce the layered structure as one particular connection scheme. In the J code, this scheme is represented by a matrix `maskW`, which contains 1 for each existing connection and 0 for each pair of neurons that is not connected.
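A connection mask of this kind can be sketched as follows. This is a Python illustration, not the page's J code. The helper `layered_mask`, the variable `mask_w`, and the layer sizes are hypothetical names chosen for the example. The orientation (rows are source neurons, columns are destination neurons) is also an assumption; the J code may use the opposite convention.

```python
def layered_mask(sizes):
    """Return an n-by-n 0/1 matrix for a strictly layered network.

    Assumed convention: entry [i][j] is 1 when neuron i feeds neuron j,
    i.e. when neuron j sits in the layer immediately after neuron i.
    """
    # layer[i] is the index of the layer that neuron i belongs to,
    # e.g. sizes [2, 3, 1] gives [0, 0, 1, 1, 1, 2]
    layer = [k for k, size in enumerate(sizes) for _ in range(size)]
    n = len(layer)
    return [[1 if layer[j] == layer[i] + 1 else 0 for j in range(n)]
            for i in range(n)]


# Hypothetical network: 2 input neurons, 3 hidden neurons, 1 output neuron
mask_w = layered_mask([2, 3, 1])
for row in mask_w:
    print(row)
```

Any other connection scheme (skip connections, sparse wiring) is just a different 0/1 matrix of the same shape. Multiplying the weight matrix element-wise by the mask keeps the weights of absent connections at zero.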

Links :

|

J code :

|