
# -*- coding: utf-8 -*-

"""Copie de zero-shot-object-detection-with-grounding-dino.ipynb

Automatically generated by Colab.

Original file is located at

https://colab.research.google.com/drive/1dLWbwub0OKzGaq86waji0tq1mu48OEPb

https://colab.research.google.com/github/roboflow-ai/notebooks/blob/main/notebooks/zero-shot-object-detection-with-grounding-dino.ipynb

[Roboflow Notebooks](https://github.com/roboflow/notebooks)

# Zero-Shot Object Detection with Grounding DINO

---

[Open in Colab](https://colab.research.google.com/github/roboflow-ai/notebooks/blob/main/notebooks/zero-shot-object-detection-with-grounding-dino.ipynb) [GitHub](https://github.com/IDEA-Research/GroundingDINO) [arXiv](https://arxiv.org/abs/2303.05499)

Grounding DINO can detect **arbitrary objects** with human inputs such as category names or referring expressions. The key to open-set object detection is introducing language to the closed-set detector DINO, which enables open-set concept generalization. If you want to learn more, visit the official GitHub [repository](https://github.com/IDEA-Research/GroundingDINO) and read the [paper](https://arxiv.org/abs/2303.05499).

## Complementary Materials

---

[Blog post](https://blog.roboflow.com/grounding-dino-zero-shot-object-detection) [YouTube video](https://youtu.be/cMa77r3YrDk)

We recommend that you follow along in this notebook while reading the blog post on Grounding DINO. We will talk about the advantages of Grounding DINO, analyze the model architecture, and provide real prompt examples.

## ⚠️ Disclaimer

The Grounding DINO codebase is still under development. If you experience any problems with launching the notebook, please let us know by opening an [issue](https://github.com/roboflow/notebooks/issues) on our GitHub.

## Pro Tip: Use GPU Acceleration

If you are running this notebook in Google Colab, navigate to `Edit` -> `Notebook settings` -> `Hardware accelerator`, set it to `GPU`, and then click `Save`. This will ensure your notebook uses a GPU, which will significantly speed up inference.

## Steps in this Tutorial

In this tutorial, we are going to cover:

- Before you start

- Install Grounding DINO 🦕

- Download Grounding DINO Weights 🏋️

- Download Example Data

- Load Grounding DINO Model

- Grounding DINO Demo

- Grounding DINO with Roboflow Dataset

- 🏆 Congratulations

**Let's begin!**

## Before you start

Let's make sure that we have access to a GPU. We can use the `nvidia-smi` command to do that. In case of any problems, navigate to `Edit` -> `Notebook settings` -> `Hardware accelerator`, set it to `GPU`, and then click `Save`.
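
The same check can be made from Python. The sketch below is a minimal, standard-library-only illustration; the helper name `nvidia_smi_available` is hypothetical and not part of the original notebook. It only reports whether the `nvidia-smi` binary is on `PATH` and exits cleanly, which is a rough proxy for a usable GPU rather than a guarantee.

```python
import shutil
import subprocess


def nvidia_smi_available() -> bool:
    # Hypothetical helper: True when nvidia-smi is on PATH and exits with code 0.
    executable = shutil.which("nvidia-smi")
    if executable is None:
        return False
    try:
        result = subprocess.run([executable], capture_output=True, timeout=30)
    except (OSError, subprocess.TimeoutExpired):
        return False
    return result.returncode == 0


print("nvidia-smi available:", nvidia_smi_available())
```

If this prints `False` in Colab, the hardware accelerator is probably not set to `GPU`.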

"""

!nvidia-smi

import os

HOME = os.getcwd()

print(HOME)

"""## Install Grounding DINO 🦕"""

# Commented out IPython magic to ensure Python compatibility.

# %cd {HOME}

!git clone https://github.com/IDEA-Research/GroundingDINO.git

# %cd {HOME}/GroundingDINO

!pip install -q -e .

!pip install -q roboflow

import os

CONFIG_PATH = os.path.join(HOME, "GroundingDINO/groundingdino/config/GroundingDINO_SwinT_OGC.py")

print(CONFIG_PATH, "; exist:", os.path.isfile(CONFIG_PATH))

"""## Download Grounding DINO Weights 🏋️"""

# Commented out IPython magic to ensure Python compatibility.

# %cd {HOME}

!mkdir -p {HOME}/weights

# %cd {HOME}/weights

!wget -q https://github.com/IDEA-Research/GroundingDINO/releases/download/v0.1.0-alpha/groundingdino_swint_ogc.pth

import os

WEIGHTS_NAME = "groundingdino_swint_ogc.pth"

WEIGHTS_PATH = os.path.join(HOME, "weights", WEIGHTS_NAME)

print(WEIGHTS_PATH, "; exist:", os.path.isfile(WEIGHTS_PATH))
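"""Re-running the download cell fetches the weights again even when they are already on disk. As a possible alternative, the sketch below skips the download when the target file exists. The helper `ensure_file` and its `fetch` parameter are assumptions made for illustration, not part of Grounding DINO or the original notebook; `fetch` defaults to `urllib.request.urlretrieve` from the standard library.

```python
import os
from typing import Callable
from urllib.request import urlretrieve


def ensure_file(url: str, path: str, fetch: Callable[[str, str], object] = urlretrieve) -> bool:
    # Hypothetical helper: download url to path unless the file already exists.
    # Returns True when a download happened and False when it was skipped.
    if os.path.isfile(path):
        return False
    # Create the parent directory if needed (same effect as `mkdir -p`).
    os.makedirs(os.path.dirname(path) or ".", exist_ok=True)
    fetch(url, path)
    return True


# Usage in this notebook (commented out because it needs network access):
# ensure_file(
#     "https://github.com/IDEA-Research/GroundingDINO/releases/download/v0.1.0-alpha/groundingdino_swint_ogc.pth",
#     WEIGHTS_PATH,
# )
```

Passing the downloader as a parameter keeps the helper easy to exercise without touching the network.
"""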

"""## Download Example Data"""

# Commented out IPython magic to ensure Python compatibility.

# %cd {HOME}

!mkdir -p {HOME}/data

# %cd {HOME}/data

!wget -q https://media.roboflow.com/notebooks/examples/dog.jpeg

!wget -q https://media.roboflow.com/notebooks/examples/dog-2.jpeg

!wget -q https://media.roboflow.com/notebooks/examples/dog-3.jpeg

!wget -q https://media.roboflow.com/notebooks/examples/dog-4.jpeg

"""## Load Grounding DINO Model"""

# Commented out IPython magic to ensure Python compatibility.

# %cd {HOME}/GroundingDINO

from groundingdino.util.inference import load_model, load_image, predict, annotate

model = load_model(CONFIG_PATH, WEIGHTS_PATH)

"""## Grounding DINO Demo"""

# Commented out IPython magic to ensure Python compatibility.

import os

import supervision as sv

IMAGE_NAME = "dog-3.jpeg"

IMAGE_PATH = os.path.join(HOME, "data", IMAGE_NAME)

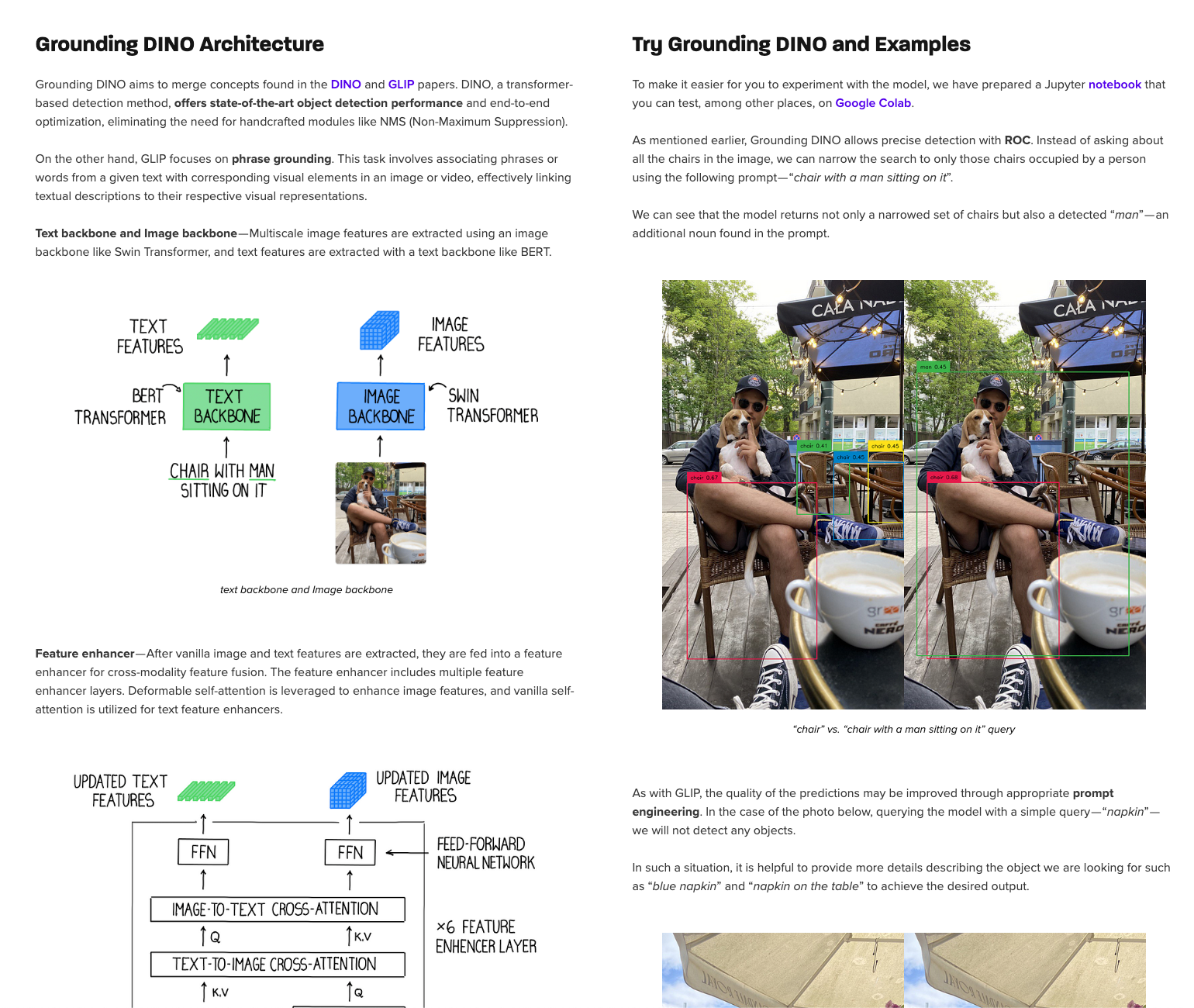

TEXT_PROMPT = "chair"

BOX_TRESHOLD = 0.35

TEXT_TRESHOLD = 0.25

image_source, image = load_image(IMAGE_PATH)

boxes, logits, phrases = predict(
    model=model,
    image=image,
    caption=TEXT_PROMPT,
    box_threshold=BOX_TRESHOLD,
    text_threshold=TEXT_TRESHOLD
)

annotated_frame = annotate(image_source=image_source, boxes=boxes, logits=logits, phrases=phrases)

# %matplotlib inline

sv.plot_image(annotated_frame, (16, 16))
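"""To use the detections outside of `annotate`, it helps to know the box format. As far as we can tell from the upstream `annotate` helper, `predict` returns boxes as normalized `(cx, cy, w, h)` values in the range 0 to 1. The sketch below converts one such box to pixel `(x_min, y_min, x_max, y_max)` coordinates in plain Python. The function name `cxcywh_norm_to_xyxy_pixels` is hypothetical, and the box format is an assumption you should verify against your installed version.

```python
from typing import Sequence, Tuple


def cxcywh_norm_to_xyxy_pixels(
    box: Sequence[float], image_width: int, image_height: int
) -> Tuple[float, float, float, float]:
    # box is (center_x, center_y, width, height), each normalized to [0, 1].
    cx, cy, w, h = box
    x_min = (cx - w / 2) * image_width
    y_min = (cy - h / 2) * image_height
    x_max = (cx + w / 2) * image_width
    y_max = (cy + h / 2) * image_height
    return x_min, y_min, x_max, y_max


# A box centered in a 100x200 (width x height) image, covering half of each side.
print(cxcywh_norm_to_xyxy_pixels((0.5, 0.5, 0.5, 0.5), 100, 200))
# → (25.0, 50.0, 75.0, 150.0)
```

In the notebook, the image size would come from `image_source.shape`, assuming it is a height x width x channels array.
"""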

# Commented out IPython magic to ensure Python compatibility.

import os

import supervision as sv

IMAGE_NAME = "dog-3.jpeg"

IMAGE_PATH = os.path.join(HOME, "data", IMAGE_NAME)

TEXT_PROMPT = "chair with man sitting on it"

BOX_TRESHOLD = 0.35

TEXT_TRESHOLD = 0.25

image_source, image = load_image(IMAGE_PATH)

boxes, logits, phrases = predict(
    model=model,
    image=image,
    caption=TEXT_PROMPT,
    box_threshold=BOX_TRESHOLD,
    text_threshold=TEXT_TRESHOLD
)

annotated_frame = annotate(image_source=image_source, boxes=boxes, logits=logits, phrases=phrases)

# %matplotlib inline

sv.plot_image(annotated_frame, (16, 16))
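"""The two thresholds play different roles. The sketch below illustrates our understanding of them under stated assumptions: each candidate box has one similarity score per prompt token, `box_threshold` keeps a box only if its best token score exceeds the threshold, and `text_threshold` then selects which tokens form the label for that box. This is a simplified plain-Python illustration with made-up scores, not the library's implementation; both function names are hypothetical.

```python
from typing import List, Sequence


def filter_by_box_threshold(query_scores: Sequence[Sequence[float]], box_threshold: float) -> List[int]:
    # Keep the indices of candidate boxes whose best token score exceeds box_threshold.
    return [i for i, scores in enumerate(query_scores) if max(scores) > box_threshold]


def tokens_above_text_threshold(scores: Sequence[float], text_threshold: float) -> List[int]:
    # Keep the indices of prompt tokens whose score exceeds text_threshold.
    return [j for j, score in enumerate(scores) if score > text_threshold]


# Made-up scores: three candidate boxes, three prompt tokens each.
scores = [
    [0.10, 0.62, 0.30],
    [0.05, 0.20, 0.12],
    [0.40, 0.28, 0.02],
]
kept = filter_by_box_threshold(scores, box_threshold=0.35)
print(kept)  # → [0, 2]
print([tokens_above_text_threshold(scores[i], text_threshold=0.25) for i in kept])
# → [[1, 2], [0, 1]]
```

Raising `BOX_TRESHOLD` should therefore return fewer boxes, while raising `TEXT_TRESHOLD` should shorten the phrases attached to them.
"""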

# Commented out IPython magic to ensure Python compatibility.

import os

import supervision as sv

IMAGE_NAME = "dog-3.jpeg"

IMAGE_PATH = os.path.join(HOME, "data", IMAGE_NAME)

TEXT_PROMPT = "chair, dog, table, shoe, light bulb, coffee, hat, glasses, car, tail, umbrella"

BOX_TRESHOLD = 0.35

TEXT_TRESHOLD = 0.25

image_source, image = load_image(IMAGE_PATH)

boxes, logits, phrases = predict(
    model=model,
    image=image,
    caption=TEXT_PROMPT,
    box_threshold=BOX_TRESHOLD,
    text_threshold=TEXT_TRESHOLD
)

annotated_frame = annotate(image_source=image_source, boxes=boxes, logits=logits, phrases=phrases)

# %matplotlib inline

sv.plot_image(annotated_frame, (16, 16))

# Commented out IPython magic to ensure Python compatibility.

import os

import supervision as sv

IMAGE_NAME = "dog-2.jpeg"

IMAGE_PATH = os.path.join(HOME, "data", IMAGE_NAME)

TEXT_PROMPT = "glass"

BOX_TRESHOLD = 0.35

TEXT_TRESHOLD = 0.25

image_source, image = load_image(IMAGE_PATH)

boxes, logits, phrases = predict(
    model=model,
    image=image,
    caption=TEXT_PROMPT,
    box_threshold=BOX_TRESHOLD,
    text_threshold=TEXT_TRESHOLD
)

annotated_frame = annotate(image_source=image_source, boxes=boxes, logits=logits, phrases=phrases)

# %matplotlib inline

sv.plot_image(annotated_frame, (16, 16))

# Commented out IPython magic to ensure Python compatibility.

import os

import supervision as sv

IMAGE_NAME = "dog-2.jpeg"

IMAGE_PATH = os.path.join(HOME, "data", IMAGE_NAME)

TEXT_PROMPT = "glass most to the right"

BOX_TRESHOLD = 0.35

TEXT_TRESHOLD = 0.25

image_source, image = load_image(IMAGE_PATH)

boxes, logits, phrases = predict(
    model=model,
    image=image,
    caption=TEXT_PROMPT,
    box_threshold=BOX_TRESHOLD,
    text_threshold=TEXT_TRESHOLD
)

annotated_frame = annotate(image_source=image_source, boxes=boxes, logits=logits, phrases=phrases)

# %matplotlib inline

sv.plot_image(annotated_frame, (16, 16))

# Commented out IPython magic to ensure Python compatibility.

import os

import supervision as sv

IMAGE_NAME = "dog-2.jpeg"

IMAGE_PATH = os.path.join(HOME, "data", IMAGE_NAME)

TEXT_PROMPT = "straw"

BOX_TRESHOLD = 0.35

TEXT_TRESHOLD = 0.25

image_source, image = load_image(IMAGE_PATH)

boxes, logits, phrases = predict(
    model=model,
    image=image,
    caption=TEXT_PROMPT,
    box_threshold=BOX_TRESHOLD,
    text_threshold=TEXT_TRESHOLD
)

annotated_frame = annotate(image_source=image_source, boxes=boxes, logits=logits, phrases=phrases)

# %matplotlib inline

sv.plot_image(annotated_frame, (16, 16))

# Commented out IPython magic to ensure Python compatibility.

import os

import supervision as sv

IMAGE_NAME = "dog-4.jpeg"

IMAGE_PATH = os.path.join(HOME, "data", IMAGE_NAME)

TEXT_PROMPT = "mens shadow"

BOX_TRESHOLD = 0.35

TEXT_TRESHOLD = 0.25

image_source, image = load_image(IMAGE_PATH)

boxes, logits, phrases = predict(
    model=model,
    image=image,
    caption=TEXT_PROMPT,
    box_threshold=BOX_TRESHOLD,
    text_threshold=TEXT_TRESHOLD
)

annotated_frame = annotate(image_source=image_source, boxes=boxes, logits=logits, phrases=phrases)

# %matplotlib inline

sv.plot_image(annotated_frame, (16, 16))
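"""The demo cells above repeat the same load, predict, and annotate steps with a different image and prompt each time. One way to reduce the repetition is a small wrapper such as the sketch below. The name `detect_and_annotate` is hypothetical. The three callables are passed in as arguments only so that the sketch stays self-contained; in this notebook they would be `load_image`, `predict`, and `annotate` from `groundingdino.util.inference`, called with the same keyword arguments used in the cells above.

```python
from typing import Any, Callable


def detect_and_annotate(
    model: Any,
    image_path: str,
    caption: str,
    *,
    load_image: Callable[..., Any],
    predict: Callable[..., Any],
    annotate: Callable[..., Any],
    box_threshold: float = 0.35,
    text_threshold: float = 0.25,
) -> Any:
    # Load the image, run the detector with the given prompt, and draw the results.
    image_source, image = load_image(image_path)
    boxes, logits, phrases = predict(
        model=model,
        image=image,
        caption=caption,
        box_threshold=box_threshold,
        text_threshold=text_threshold,
    )
    return annotate(image_source=image_source, boxes=boxes, logits=logits, phrases=phrases)


# Usage in this notebook (commented out because it needs the loaded model):
# frame = detect_and_annotate(
#     model, IMAGE_PATH, "straw",
#     load_image=load_image, predict=predict, annotate=annotate,
# )
# sv.plot_image(frame, (16, 16))
```
"""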

"""## Grounding DINO with Roboflow Dataset"""

# Commented out IPython magic to ensure Python compatibility.

# %cd {HOME}

import roboflow

roboflow.login()

from random import randrange

from roboflow.core.dataset import Dataset

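def pick_random_image(dataset: Dataset, subdirectory: str = "valid") -> str:
    image_directory_path = os.path.join(dataset.location, subdirectory)
    # Keep image files only; a COCO export may also place an annotation JSON in this folder.
    image_names = [
        name for name in sorted(os.listdir(image_directory_path))
        if name.lower().endswith((".jpg", ".jpeg", ".png"))
    ]
    image_index = randrange(len(image_names))
    image_name = image_names[image_index]
    image_path = os.path.join(image_directory_path, image_name)
    return image_path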

from roboflow import Roboflow

rf = Roboflow()

project = rf.workspace("work-safe-project").project("safety-vest---v4")

dataset = project.version(3).download("coco")

TEXT_PROMPT = ", ".join(project.classes.keys())

TEXT_PROMPT

image_path = pick_random_image(dataset=dataset)

# Commented out IPython magic to ensure Python compatibility.

import os

import supervision as sv

BOX_TRESHOLD = 0.35

TEXT_TRESHOLD = 0.25

image_source, image = load_image(image_path)

boxes, logits, phrases = predict(
    model=model,
    image=image,
    caption=TEXT_PROMPT,
    box_threshold=BOX_TRESHOLD,
    text_threshold=TEXT_TRESHOLD
)

annotated_frame = annotate(image_source=image_source, boxes=boxes, logits=logits, phrases=phrases)

# %matplotlib inline

sv.plot_image(annotated_frame, (16, 16))

"""**NOTE:** The design of the prompt is very important. Try to be as accurate as possible. Avoid abbreviations."""

TEXT_PROMPT = "reflective safety vest, helmet, head, nonreflective safety vest"
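"""Instead of typing the descriptive prompt by hand, it can be derived from the dataset class names with a small mapping. The sketch below is one possible approach; the helper `build_prompt` is hypothetical, and the short class names in the example are made up for illustration, so they may not match the classes in the downloaded dataset.

```python
from typing import Dict, Iterable


def build_prompt(class_names: Iterable[str], descriptions: Dict[str, str]) -> str:
    # Replace each class name with a descriptive phrase when one is provided;
    # otherwise fall back to the name itself with separators turned into spaces.
    phrases = []
    for name in class_names:
        phrase = descriptions.get(name, name.replace("-", " ").replace("_", " "))
        phrases.append(phrase.strip().lower())
    return ", ".join(phrases)


# Made-up class names and descriptions, for illustration only.
example_classes = ["vest", "helmet", "head", "no-vest"]
example_descriptions = {
    "vest": "reflective safety vest",
    "no-vest": "nonreflective safety vest",
}
print(build_prompt(example_classes, example_descriptions))
# → reflective safety vest, helmet, head, nonreflective safety vest
```

Keeping the mapping explicit makes it easy to try several wordings for the same class and compare the detections.
"""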

# Commented out IPython magic to ensure Python compatibility.

import os

import supervision as sv

BOX_TRESHOLD = 0.35

TEXT_TRESHOLD = 0.25

image_source, image = load_image(image_path)

boxes, logits, phrases = predict(
    model=model,
    image=image,
    caption=TEXT_PROMPT,
    box_threshold=BOX_TRESHOLD,
    text_threshold=TEXT_TRESHOLD
)

annotated_frame = annotate(image_source=image_source, boxes=boxes, logits=logits, phrases=phrases)

# %matplotlib inline

sv.plot_image(annotated_frame, (16, 16))

"""## 🏆 Congratulations

### Learning Resources

Roboflow has produced many resources that you may find interesting as you advance your knowledge of computer vision:

- [Roboflow Notebooks](https://github.com/roboflow/notebooks): A repository of over 20 notebooks that walk through how to train custom models with a range of model types, from YOLOv7 to SegFormer.

- [Roboflow YouTube](https://www.youtube.com/c/Roboflow): Our library of videos featuring deep dives into the latest in computer vision, detailed tutorials that accompany our notebooks, and more.

- [Roboflow Discuss](https://discuss.roboflow.com/): Have a question about how to do something on Roboflow? Ask your question on our discussion forum.

- [Roboflow Models](https://roboflow.com): Learn about state-of-the-art models and their performance. Find links and tutorials to guide your learning.

### Convert data formats

Roboflow provides free utilities to convert data between dozens of popular computer vision formats. Check out [Roboflow Formats](https://roboflow.com/formats) to find tutorials on how to convert data between formats in a few clicks.

### Connect computer vision to your project logic

[Roboflow Templates](https://roboflow.com/templates) is a public gallery of code snippets that you can use to connect computer vision to your project logic. Code snippets range from sending emails after inference to measuring object distance between detections.

"""