View file src/colab/05b_cifar10_resnet.py - Download

# -*- coding: utf-8 -*-

"""05b-cifar10-resnet.ipynb

Automatically generated by Colab.

Original file is located at

https://colab.research.google.com/drive/1AnAW4nMEymIp368XVtHk0DUod4wYlmCx

Source : https://jovian.ai/aakashns/05b-cifar10-resnet

# Classifying CIFAR10 images using ResNets, Regularization and Data Augmentation in PyTorch

_A.K.A. Training an image classifier from scratch to over 90% accuracy in less than 5 minutes on a single GPU_

### Part 6 of "Deep Learning with Pytorch: Zero to GANs"

This tutorial series is a hands-on beginner-friendly introduction to deep learning using [PyTorch](https://pytorch.org), an open-source neural networks library. These tutorials take a practical and coding-focused approach. The best way to learn the material is to execute the code and experiment with it yourself. Check out the full series here:

1. [PyTorch Basics: Tensors & Gradients](https://jovian.ai/aakashns/01-pytorch-basics)

2. [Gradient Descent & Linear Regression](https://jovian.ai/aakashns/02-linear-regression)

3. [Working with Images & Logistic Regression](https://jovian.ai/aakashns/03-logistic-regression)

4. [Training Deep Neural Networks on a GPU](https://jovian.ai/aakashns/04-feedforward-nn)

5. [Image Classification using Convolutional Neural Networks](https://jovian.ai/aakashns/05-cifar10-cnn)

6. [Data Augmentation, Regularization and ResNets](https://jovian.ai/aakashns/05b-cifar10-resnet)

7. [Generating Images using Generative Adversarial Networks](https://jovian.ai/aakashns/06b-anime-dcgan/)

In this tutorial, we'll use the following techniques to train a state-of-the-art model in less than 5 minutes to achieve over 90% accuracy in classifying images from the CIFAR10 dataset:

- Data normalization

- Data augmentation

- Residual connections

- Batch normalization

- Learning rate scheduling

- Weight Decay

- Gradient clipping

- Adam optimizer

### How to run the code

This tutorial is an executable [Jupyter notebook](https://jupyter.org) hosted on [Jovian](https://www.jovian.ai). You can _run_ this tutorial and experiment with the code examples in a couple of ways: *using free online resources* (recommended) or *on your computer*.

#### Option 1: Running using free online resources (1-click, recommended)

The easiest way to start executing the code is to click the **Run** button at the top of this page and select **Run on Colab**. [Google Colab](https://colab.research.google.com) is a free online platform for running Jupyter notebooks using Google's cloud infrastructure. You can also select "Run on Binder" or "Run on Kaggle" if you face issues running the notebook on Google Colab.

#### Option 2: Running on your computer locally

To run the code on your computer locally, you'll need to set up [Python](https://www.python.org), download the notebook and install the required libraries. We recommend using the [Conda](https://docs.conda.io/projects/conda/en/latest/user-guide/install/) distribution of Python. Click the **Run** button at the top of this page, select the **Run Locally** option, and follow the instructions.

### Using a GPU for faster training

You can use a [Graphics Processing Unit](https://en.wikipedia.org/wiki/Graphics_processing_unit) (GPU) to train your models faster if your execution platform is connected to a GPU manufactured by NVIDIA. Follow these instructions to use a GPU on the platform of your choice:

* _Google Colab_: Use the menu option "Runtime > Change Runtime Type" and select "GPU" from the "Hardware Accelerator" dropdown.

* _Kaggle_: In the "Settings" section of the sidebar, select "GPU" from the "Accelerator" dropdown. Use the button on the top-right to open the sidebar.

* _Binder_: Notebooks running on Binder cannot use a GPU, as the machines powering Binder aren't connected to any GPUs.

* _Linux_: If your laptop/desktop has an NVIDIA GPU (graphics card), make sure you have installed the [NVIDIA CUDA drivers](https://docs.nvidia.com/cuda/cuda-installation-guide-linux/index.html).

* _Windows_: If your laptop/desktop has an NVIDIA GPU (graphics card), make sure you have installed the [NVIDIA CUDA drivers](https://docs.nvidia.com/cuda/cuda-installation-guide-microsoft-windows/index.html).

* _macOS_: macOS is not compatible with NVIDIA GPUs

If you do not have access to a GPU or aren't sure what it is, don't worry, you can execute all the code in this tutorial just fine without a GPU.

Let's begin by installing and importing the required libraries.

"""

# Uncomment and run the appropriate command for your operating system, if required

# No installation is reqiured on Google Colab / Kaggle notebooks

# Linux / Binder / Windows (No GPU)

# !pip install numpy matplotlib torch==1.7.0+cpu torchvision==0.8.1+cpu torchaudio==0.7.0 -f https://download.pytorch.org/whl/torch_stable.html

# Linux / Windows (GPU)

# pip install torch==1.7.1+cu110 torchvision==0.8.2+cu110 torchaudio==0.7.2 -f https://download.pytorch.org/whl/torch_stable.html

# MacOS (NO GPU)

# !pip install numpy matplotlib torch torchvision torchaudio

# Commented out IPython magic to ensure Python compatibility.

import os

import torch

import torchvision

import tarfile

import torch.nn as nn

import numpy as np

import torch.nn.functional as F

from torchvision.datasets.utils import download_url

from torchvision.datasets import ImageFolder

from torch.utils.data import DataLoader

import torchvision.transforms as tt

from torch.utils.data import random_split

from torchvision.utils import make_grid

import matplotlib

import matplotlib.pyplot as plt

# %matplotlib inline

matplotlib.rcParams['figure.facecolor'] = '#ffffff'

project_name='05b-cifar10-resnet'

"""## Preparing the CIFAR10 Dataset

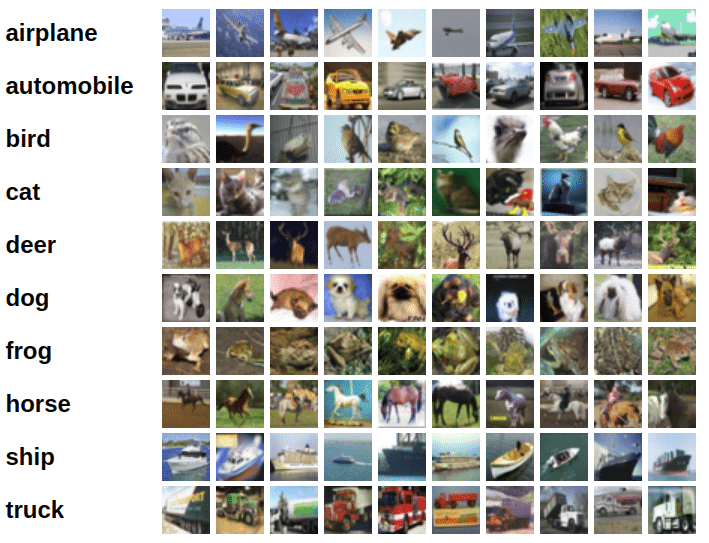

This notebook is an extension to the tutorial [Image Classification using CNNs in PyTorch](https://jovian.ai/aakashns/05-cifar10-cnn), where we trained a deep convolutional neural network to classify images from the CIFAR10 dataset with around 75% accuracy. Here are some images from the dataset:

Let's begin by downloading the dataset and creating PyTorch datasets to load the data, just as we did in the previous tutorial.

"""

from torchvision.datasets.utils import download_url

# Dowload the dataset

dataset_url = "https://s3.amazonaws.com/fast-ai-imageclas/cifar10.tgz"

download_url(dataset_url, '.')

# Extract from archive

with tarfile.open('./cifar10.tgz', 'r:gz') as tar:

tar.extractall(path='./data')

# Look into the data directory

data_dir = './data/cifar10'

print(os.listdir(data_dir))

classes = os.listdir(data_dir + "/train")

print(classes)

"""We can create training and validation datasets using the `ImageFolder` class from `torchvision`. In addition to the `ToTensor` transform, we'll also apply some other transforms to the images. There are a few important changes we'll make while creating PyTorch datasets for training and validation:

1. **Use test set for validation**: Instead of setting aside a fraction (e.g. 10%) of the data from the training set for validation, we'll simply use the test set as our validation set. This just gives a little more data to train with. In general, once you have picked the best model architecture & hypeparameters using a fixed validation set, it is a good idea to retrain the same model on the entire dataset just to give it a small final boost in performance.

2. **Channel-wise data normalization**: We will normalize the image tensors by subtracting the mean and dividing by the standard deviation across each channel. As a result, the mean of the data across each channel is 0, and standard deviation is 1. Normalizing the data prevents the values from any one channel from disproportionately affecting the losses and gradients while training, simply by having a higher or wider range of values that others.

3. **Randomized data augmentations**: We will apply randomly chosen transformations while loading images from the training dataset. Specifically, we will pad each image by 4 pixels, and then take a random crop of size 32 x 32 pixels, and then flip the image horizontally with a 50% probability. Since the transformation will be applied randomly and dynamically each time a particular image is loaded, the model sees slightly different images in each epoch of training, which allows it generalize better.

"""

# Data transforms (normalization & data augmentation)

stats = ((0.4914, 0.4822, 0.4465), (0.2023, 0.1994, 0.2010))

train_tfms = tt.Compose([tt.RandomCrop(32, padding=4, padding_mode='reflect'),

tt.RandomHorizontalFlip(),

# tt.RandomRotate

# tt.RandomResizedCrop(256, scale=(0.5,0.9), ratio=(1, 1)),

# tt.ColorJitter(brightness=0.1, contrast=0.1, saturation=0.1, hue=0.1),

tt.ToTensor(),

tt.Normalize(*stats,inplace=True)])

valid_tfms = tt.Compose([tt.ToTensor(), tt.Normalize(*stats)])

# PyTorch datasets

train_ds = ImageFolder(data_dir+'/train', train_tfms)

valid_ds = ImageFolder(data_dir+'/test', valid_tfms)

"""Next, we can create data loaders for retrieving images in batches. We'll use a relatively large batch size of 500 to utlize a larger portion of the GPU RAM. You can try reducing the batch size & restarting the kernel if you face an "out of memory" error."""

batch_size = 400

# PyTorch data loaders

train_dl = DataLoader(train_ds, batch_size, shuffle=True, num_workers=3, pin_memory=True)

valid_dl = DataLoader(valid_ds, batch_size*2, num_workers=3, pin_memory=True)

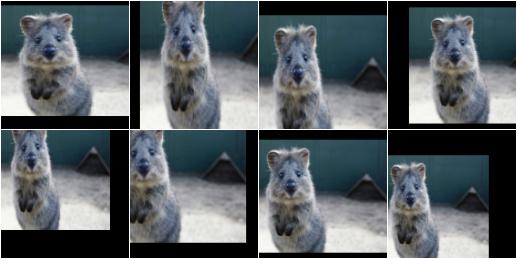

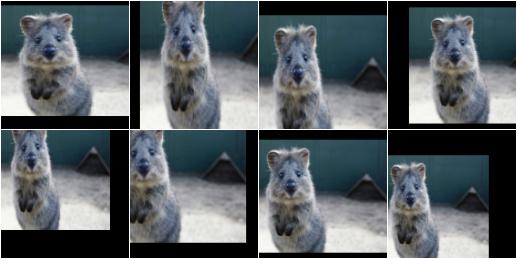

"""Let's take a look at some sample images from the training dataloader. To display the images, we'll need to _denormalize_ the pixels values to bring them back into the range `(0,1)`."""

def denormalize(images, means, stds):

means = torch.tensor(means).reshape(1, 3, 1, 1)

stds = torch.tensor(stds).reshape(1, 3, 1, 1)

return images * stds + means

def show_batch(dl):

for images, labels in dl:

fig, ax = plt.subplots(figsize=(12, 12))

ax.set_xticks([]); ax.set_yticks([])

denorm_images = denormalize(images, *stats)

ax.imshow(make_grid(denorm_images[:64], nrow=8).permute(1, 2, 0).clamp(0,1))

break

show_batch(train_dl)

"""The colors seem out of place because of the normalization. Note that normalization is also applied during inference. If you look closely, you can see the cropping and reflection padding in some of the images. Horizontal flip is a bit difficult to detect from visual inspection.

## Using a GPU

To seamlessly use a GPU, if one is available, we define a couple of helper functions (`get_default_device` & `to_device`) and a helper class `DeviceDataLoader` to move our model & data to the GPU as required. These are described in more detail in a [previous tutorial](https://jovian.ml/aakashns/04-feedforward-nn#C21).

"""

def get_default_device():

"""Pick GPU if available, else CPU"""

if torch.cuda.is_available():

return torch.device('cuda')

else:

return torch.device('cpu')

def to_device(data, device):

"""Move tensor(s) to chosen device"""

if isinstance(data, (list,tuple)):

return [to_device(x, device) for x in data]

return data.to(device, non_blocking=True)

class DeviceDataLoader():

"""Wrap a dataloader to move data to a device"""

def __init__(self, dl, device):

self.dl = dl

self.device = device

def __iter__(self):

"""Yield a batch of data after moving it to device"""

for b in self.dl:

yield to_device(b, self.device)

def __len__(self):

"""Number of batches"""

return len(self.dl)

"""Based on where you're running this notebook, your default device could be a CPU (`torch.device('cpu')`) or a GPU (`torch.device('cuda')`)"""

device = get_default_device()

device

"""We can now wrap our training and validation data loaders using `DeviceDataLoader` for automatically transferring batches of data to the GPU (if available)."""

train_dl = DeviceDataLoader(train_dl, device)

valid_dl = DeviceDataLoader(valid_dl, device)

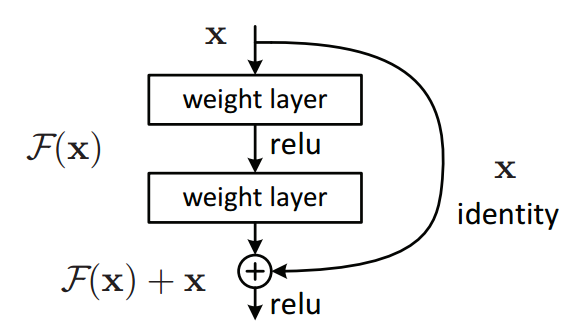

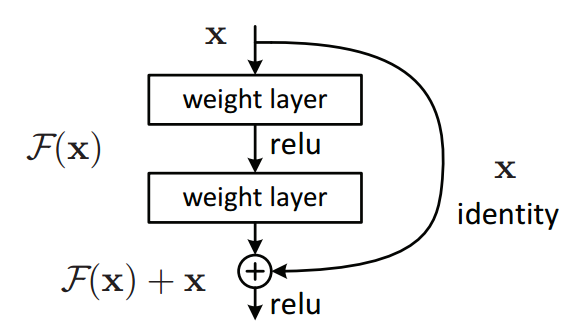

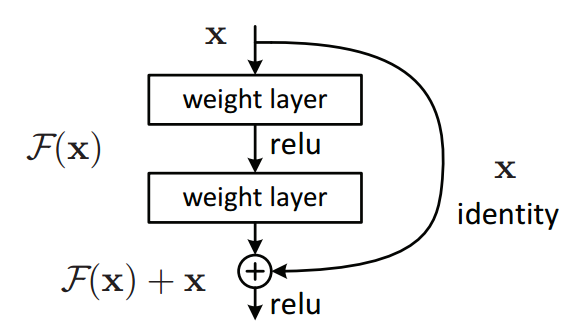

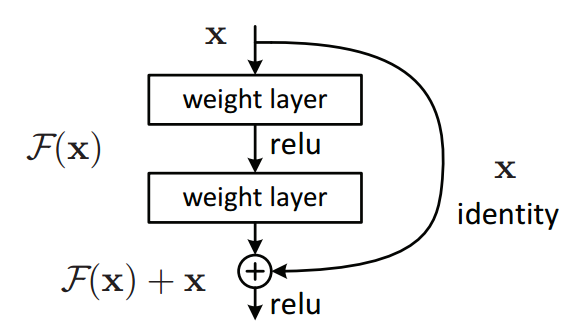

"""## Model with Residual Blocks and Batch Normalization

One of the key changes to our CNN model this time is the addition of the resudial block, which adds the original input back to the output feature map obtained by passing the input through one or more convolutional layers.

Here is a very simple Residual block:

"""

class SimpleResidualBlock(nn.Module):

def __init__(self):

super().__init__()

self.conv1 = nn.Conv2d(in_channels=3, out_channels=3, kernel_size=3, stride=1, padding=1)

self.relu1 = nn.ReLU()

self.conv2 = nn.Conv2d(in_channels=3, out_channels=3, kernel_size=3, stride=1, padding=1)

self.relu2 = nn.ReLU()

def forward(self, x):

out = self.conv1(x)

out = self.relu1(out)

out = self.conv2(out)

return self.relu2(out) + x # ReLU can be applied before or after adding the input

simple_resnet = to_device(SimpleResidualBlock(), device)

for images, labels in train_dl:

out = simple_resnet(images)

print(out.shape)

break

del simple_resnet, images, labels

torch.cuda.empty_cache()

"""This seeming small change produces a drastic improvement in the performance of the model. Also, after each convolutional layer, we'll add a batch normalization layer, which normalizes the outputs of the previous layer.

Go through the following blog posts to learn more:

* Why and how residual blocks work: https://towardsdatascience.com/residual-blocks-building-blocks-of-resnet-fd90ca15d6ec

* Batch normalization and dropout explained: https://towardsdatascience.com/batch-normalization-and-dropout-in-neural-networks-explained-with-pytorch-47d7a8459bcd

We will use the ResNet9 architecture, as described in [this blog series](https://www.myrtle.ai/2018/09/24/how_to_train_your_resnet/) :

"""

def accuracy(outputs, labels):

_, preds = torch.max(outputs, dim=1)

return torch.tensor(torch.sum(preds == labels).item() / len(preds))

class ImageClassificationBase(nn.Module):

def training_step(self, batch):

images, labels = batch

out = self(images) # Generate predictions

loss = F.cross_entropy(out, labels) # Calculate loss

return loss

def validation_step(self, batch):

images, labels = batch

out = self(images) # Generate predictions

loss = F.cross_entropy(out, labels) # Calculate loss

acc = accuracy(out, labels) # Calculate accuracy

return {'val_loss': loss.detach(), 'val_acc': acc}

def validation_epoch_end(self, outputs):

batch_losses = [x['val_loss'] for x in outputs]

epoch_loss = torch.stack(batch_losses).mean() # Combine losses

batch_accs = [x['val_acc'] for x in outputs]

epoch_acc = torch.stack(batch_accs).mean() # Combine accuracies

return {'val_loss': epoch_loss.item(), 'val_acc': epoch_acc.item()}

def epoch_end(self, epoch, result):

print("Epoch [{}], last_lr: {:.5f}, train_loss: {:.4f}, val_loss: {:.4f}, val_acc: {:.4f}".format(

epoch, result['lrs'][-1], result['train_loss'], result['val_loss'], result['val_acc']))

def conv_block(in_channels, out_channels, pool=False):

layers = [nn.Conv2d(in_channels, out_channels, kernel_size=3, padding=1),

nn.BatchNorm2d(out_channels),

nn.ReLU(inplace=True)]

if pool: layers.append(nn.MaxPool2d(2))

return nn.Sequential(*layers)

class ResNet9(ImageClassificationBase):

def __init__(self, in_channels, num_classes):

super().__init__()

self.conv1 = conv_block(in_channels, 64)

self.conv2 = conv_block(64, 128, pool=True)

self.res1 = nn.Sequential(conv_block(128, 128), conv_block(128, 128))

self.conv3 = conv_block(128, 256, pool=True)

self.conv4 = conv_block(256, 512, pool=True)

self.res2 = nn.Sequential(conv_block(512, 512), conv_block(512, 512))

self.classifier = nn.Sequential(nn.MaxPool2d(4),

nn.Flatten(),

nn.Dropout(0.2),

nn.Linear(512, num_classes))

def forward(self, xb):

out = self.conv1(xb)

out = self.conv2(out)

out = self.res1(out) + out

out = self.conv3(out)

out = self.conv4(out)

out = self.res2(out) + out

out = self.classifier(out)

return out

model = to_device(ResNet9(3, 10), device)

model

"""## Training the model

Before we train the model, we're going to make a bunch of small but important improvements to our `fit` function:

* **Learning rate scheduling**: Instead of using a fixed learning rate, we will use a learning rate scheduler, which will change the learning rate after every batch of training. There are many strategies for varying the learning rate during training, and the one we'll use is called the **"One Cycle Learning Rate Policy"**, which involves starting with a low learning rate, gradually increasing it batch-by-batch to a high learning rate for about 30% of epochs, then gradually decreasing it to a very low value for the remaining epochs. Learn more: https://sgugger.github.io/the-1cycle-policy.html

* **Weight decay**: We also use weight decay, which is yet another regularization technique which prevents the weights from becoming too large by adding an additional term to the loss function.Learn more: https://towardsdatascience.com/this-thing-called-weight-decay-a7cd4bcfccab

* **Gradient clipping**: Apart from the layer weights and outputs, it also helpful to limit the values of gradients to a small range to prevent undesirable changes in parameters due to large gradient values. This simple yet effective technique is called gradient clipping. Learn more: https://towardsdatascience.com/what-is-gradient-clipping-b8e815cdfb48

Let's define a `fit_one_cycle` function to incorporate these changes. We'll also record the learning rate used for each batch.

"""

@torch.no_grad()

def evaluate(model, val_loader):

model.eval()

outputs = [model.validation_step(batch) for batch in val_loader]

return model.validation_epoch_end(outputs)

def get_lr(optimizer):

for param_group in optimizer.param_groups:

return param_group['lr']

def fit_one_cycle(epochs, max_lr, model, train_loader, val_loader,

weight_decay=0, grad_clip=None, opt_func=torch.optim.SGD):

torch.cuda.empty_cache()

history = []

# Set up cutom optimizer with weight decay

optimizer = opt_func(model.parameters(), max_lr, weight_decay=weight_decay)

# Set up one-cycle learning rate scheduler

sched = torch.optim.lr_scheduler.OneCycleLR(optimizer, max_lr, epochs=epochs,

steps_per_epoch=len(train_loader))

for epoch in range(epochs):

# Training Phase

model.train()

train_losses = []

lrs = []

for batch in train_loader:

loss = model.training_step(batch)

train_losses.append(loss)

loss.backward()

# Gradient clipping

if grad_clip:

nn.utils.clip_grad_value_(model.parameters(), grad_clip)

optimizer.step()

optimizer.zero_grad()

# Record & update learning rate

lrs.append(get_lr(optimizer))

sched.step()

# Validation phase

result = evaluate(model, val_loader)

result['train_loss'] = torch.stack(train_losses).mean().item()

result['lrs'] = lrs

model.epoch_end(epoch, result)

history.append(result)

return history

history = [evaluate(model, valid_dl)]

history

"""We're now ready to train our model. Instead of SGD (stochastic gradient descent), we'll use the Adam optimizer which uses techniques like momentum and adaptive learning rates for faster training. You can learn more about optimizers here: https://ruder.io/optimizing-gradient-descent/index.html"""

epochs = 8

max_lr = 0.01

grad_clip = 0.1

weight_decay = 1e-4

opt_func = torch.optim.Adam

# Commented out IPython magic to ensure Python compatibility.

# %%time

# history += fit_one_cycle(epochs, max_lr, model, train_dl, valid_dl,

# grad_clip=grad_clip,

# weight_decay=weight_decay,

# opt_func=opt_func)

train_time='4:24'

"""Our model trained to over **90% accuracy in under 5 minutes**! Try playing around with the data augmentations, network architecture & hyperparameters to achive the following results:

1. 94% accuracy in under 10 minutes (easy)

2. 90% accuracy in under 2.5 minutes (intermediate)

3. 94% accuracy in under 5 minutes (hard)

Let's plot the valdation set accuracies to study how the model improves over time.

"""

def plot_accuracies(history):

accuracies = [x['val_acc'] for x in history]

plt.plot(accuracies, '-x')

plt.xlabel('epoch')

plt.ylabel('accuracy')

plt.title('Accuracy vs. No. of epochs');

plot_accuracies(history)

"""We can also plot the training and validation losses to study the trend."""

def plot_losses(history):

train_losses = [x.get('train_loss') for x in history]

val_losses = [x['val_loss'] for x in history]

plt.plot(train_losses, '-bx')

plt.plot(val_losses, '-rx')

plt.xlabel('epoch')

plt.ylabel('loss')

plt.legend(['Training', 'Validation'])

plt.title('Loss vs. No. of epochs');

plot_losses(history)

"""It's clear from the trend that our model isn't overfitting to the training data just yet. Try removing batch normalization, data augmentation and residual layers one by one to study their effect on overfitting.

Finally, let's visualize how the learning rate changed over time, batch-by-batch over all the epochs.

"""

def plot_lrs(history):

lrs = np.concatenate([x.get('lrs', []) for x in history])

plt.plot(lrs)

plt.xlabel('Batch no.')

plt.ylabel('Learning rate')

plt.title('Learning Rate vs. Batch no.');

plot_lrs(history)

"""As expected, the learning rate starts at a low value, and gradually increases for 30% of the iterations to a maximum value of `0.01`, and then gradually decreases to a very small value.

## Testing with individual images

While we have been tracking the overall accuracy of a model so far, it's also a good idea to look at model's results on some sample images. Let's test out our model with some images from the predefined test dataset of 10000 images.

"""

def predict_image(img, model):

# Convert to a batch of 1

xb = to_device(img.unsqueeze(0), device)

# Get predictions from model

yb = model(xb)

# Pick index with highest probability

_, preds = torch.max(yb, dim=1)

# Retrieve the class label

return train_ds.classes[preds[0].item()]

img, label = valid_ds[0]

plt.imshow(img.permute(1, 2, 0).clamp(0, 1))

print('Label:', train_ds.classes[label], ', Predicted:', predict_image(img, model))

img, label = valid_ds[1002]

plt.imshow(img.permute(1, 2, 0))

print('Label:', valid_ds.classes[label], ', Predicted:', predict_image(img, model))

img, label = valid_ds[6153]

plt.imshow(img.permute(1, 2, 0))

print('Label:', train_ds.classes[label], ', Predicted:', predict_image(img, model))

"""Identifying where our model performs poorly can help us improve the model, by collecting more training data, increasing/decreasing the complexity of the model, and changing the hypeparameters.

## Save and Commit

Let's save the weights of the model, record the hyperparameters, and commit our experiment to Jovian. As you try different ideas, make sure to record every experiment so you can look back and analyze the results.

"""

torch.save(model.state_dict(), 'cifar10-resnet9.pth')

# Commented out IPython magic to ensure Python compatibility.

# %%script echo Disabled

# !pip install jovian --upgrade --quiet

# Commented out IPython magic to ensure Python compatibility.

# %%script echo Disabled

# import jovian

# Commented out IPython magic to ensure Python compatibility.

# %%script echo Disabled

# jovian.reset()

# jovian.log_hyperparams(arch='resnet9',

# epochs=epochs,

# lr=max_lr,

# scheduler='one-cycle',

# weight_decay=weight_decay,

# grad_clip=grad_clip,

# opt=opt_func.__name__)

# Commented out IPython magic to ensure Python compatibility.

# %%script echo Disabled

# jovian.log_metrics(val_loss=history[-1]['val_loss'],

# val_acc=history[-1]['val_acc'],

# train_loss=history[-1]['train_loss'],

# time=train_time)

# Commented out IPython magic to ensure Python compatibility.

# %%script echo Disabled

# jovian.commit(project=project_name, environment=None, outputs=['cifar10-resnet9.pth'])

"""## Summary and Further Reading

You are now ready to train state-of-the-art deep learning models from scratch. Try working on a project on your own by following these guidelines: https://jovian.ai/learn/deep-learning-with-pytorch-zero-to-gans/assignment/course-project

Here's a summary of the different techniques used in this tutorial to improve our model performance and reduce the training time:

* **Data normalization**: We normalized the image tensors by subtracting the mean and dividing by the standard deviation of pixels across each channel. Normalizing the data prevents the pixel values from any one channel from disproportionately affecting the losses and gradients. [Learn more](https://medium.com/@ml_kid/what-is-transform-and-transform-normalize-lesson-4-neural-networks-in-pytorch-ca97842336bd)

* **Data augmentation**: We applied random transformations while loading images from the training dataset. Specifically, we will pad each image by 4 pixels, and then take a random crop of size 32 x 32 pixels, and then flip the image horizontally with a 50% probability. [Learn more](https://www.analyticsvidhya.com/blog/2019/12/image-augmentation-deep-learning-pytorch/)

* **Residual connections**: One of the key changes to our CNN model was the addition of the resudial block, which adds the original input back to the output feature map obtained by passing the input through one or more convolutional layers. We used the ResNet9 architecture [Learn more](https://towardsdatascience.com/residual-blocks-building-blocks-of-resnet-fd90ca15d6ec).

* **Batch normalization**: After each convolutional layer, we added a batch normalization layer, which normalizes the outputs of the previous layer. This is somewhat similar to data normalization, except it's applied to the outputs of a layer, and the mean and standard deviation are learned parameters. [Learn more](https://towardsdatascience.com/batch-normalization-and-dropout-in-neural-networks-explained-with-pytorch-47d7a8459bcd)

* **Learning rate scheduling**: Instead of using a fixed learning rate, we will use a learning rate scheduler, which will change the learning rate after every batch of training. There are [many strategies](https://pytorch.org/docs/stable/optim.html#how-to-adjust-learning-rate) for varying the learning rate during training, and we used the "One Cycle Learning Rate Policy". [Learn more](https://sgugger.github.io/the-1cycle-policy.html)

* **Weight Decay**: We added weight decay to the optimizer, yet another regularization technique which prevents the weights from becoming too large by adding an additional term to the loss function. [Learn more](https://towardsdatascience.com/this-thing-called-weight-decay-a7cd4bcfccab)

* **Gradient clipping**: We also added gradient clippint, which helps limit the values of gradients to a small range to prevent undesirable changes in model parameters due to large gradient values during training. [Learn more.](https://towardsdatascience.com/what-is-gradient-clipping-b8e815cdfb48#63e0)

* **Adam optimizer**: Instead of SGD (stochastic gradient descent), we used the Adam optimizer which uses techniques like momentum and adaptive learning rates for faster training. There are many other optimizers to choose froma and experiment with. [Learn more.](https://ruder.io/optimizing-gradient-descent/index.html)

As an exercise, you should try applying each technique independently and see how much each one affects the performance and training time. As you try different experiments, you will start to cultivate the intuition for picking the right architectures, data augmentation & regularization techniques.

You are now ready to move on to the next tutorial in this series: [Generating Images using Generative Adversarial Networks](https://jovian.ai/aakashns/06b-anime-dcgan/)

"""

3. **Randomized data augmentations**: We will apply randomly chosen transformations while loading images from the training dataset. Specifically, we will pad each image by 4 pixels, and then take a random crop of size 32 x 32 pixels, and then flip the image horizontally with a 50% probability. Since the transformation will be applied randomly and dynamically each time a particular image is loaded, the model sees slightly different images in each epoch of training, which allows it generalize better.

"""

# Data transforms (normalization & data augmentation)

stats = ((0.4914, 0.4822, 0.4465), (0.2023, 0.1994, 0.2010))

train_tfms = tt.Compose([tt.RandomCrop(32, padding=4, padding_mode='reflect'),

tt.RandomHorizontalFlip(),

# tt.RandomRotate

# tt.RandomResizedCrop(256, scale=(0.5,0.9), ratio=(1, 1)),

# tt.ColorJitter(brightness=0.1, contrast=0.1, saturation=0.1, hue=0.1),

tt.ToTensor(),

tt.Normalize(*stats,inplace=True)])

valid_tfms = tt.Compose([tt.ToTensor(), tt.Normalize(*stats)])

# PyTorch datasets

train_ds = ImageFolder(data_dir+'/train', train_tfms)

valid_ds = ImageFolder(data_dir+'/test', valid_tfms)

"""Next, we can create data loaders for retrieving images in batches. We'll use a relatively large batch size of 500 to utlize a larger portion of the GPU RAM. You can try reducing the batch size & restarting the kernel if you face an "out of memory" error."""

batch_size = 400

# PyTorch data loaders

train_dl = DataLoader(train_ds, batch_size, shuffle=True, num_workers=3, pin_memory=True)

valid_dl = DataLoader(valid_ds, batch_size*2, num_workers=3, pin_memory=True)

"""Let's take a look at some sample images from the training dataloader. To display the images, we'll need to _denormalize_ the pixels values to bring them back into the range `(0,1)`."""

def denormalize(images, means, stds):

means = torch.tensor(means).reshape(1, 3, 1, 1)

stds = torch.tensor(stds).reshape(1, 3, 1, 1)

return images * stds + means

def show_batch(dl):

for images, labels in dl:

fig, ax = plt.subplots(figsize=(12, 12))

ax.set_xticks([]); ax.set_yticks([])

denorm_images = denormalize(images, *stats)

ax.imshow(make_grid(denorm_images[:64], nrow=8).permute(1, 2, 0).clamp(0,1))

break

show_batch(train_dl)

"""The colors seem out of place because of the normalization. Note that normalization is also applied during inference. If you look closely, you can see the cropping and reflection padding in some of the images. Horizontal flip is a bit difficult to detect from visual inspection.

## Using a GPU

To seamlessly use a GPU, if one is available, we define a couple of helper functions (`get_default_device` & `to_device`) and a helper class `DeviceDataLoader` to move our model & data to the GPU as required. These are described in more detail in a [previous tutorial](https://jovian.ml/aakashns/04-feedforward-nn#C21).

"""

def get_default_device():

"""Pick GPU if available, else CPU"""

if torch.cuda.is_available():

return torch.device('cuda')

else:

return torch.device('cpu')

def to_device(data, device):

"""Move tensor(s) to chosen device"""

if isinstance(data, (list,tuple)):

return [to_device(x, device) for x in data]

return data.to(device, non_blocking=True)

class DeviceDataLoader():

"""Wrap a dataloader to move data to a device"""

def __init__(self, dl, device):

self.dl = dl

self.device = device

def __iter__(self):

"""Yield a batch of data after moving it to device"""

for b in self.dl:

yield to_device(b, self.device)

def __len__(self):

"""Number of batches"""

return len(self.dl)

"""Based on where you're running this notebook, your default device could be a CPU (`torch.device('cpu')`) or a GPU (`torch.device('cuda')`)"""

device = get_default_device()

device

"""We can now wrap our training and validation data loaders using `DeviceDataLoader` for automatically transferring batches of data to the GPU (if available)."""

train_dl = DeviceDataLoader(train_dl, device)

valid_dl = DeviceDataLoader(valid_dl, device)

"""## Model with Residual Blocks and Batch Normalization

One of the key changes to our CNN model this time is the addition of the resudial block, which adds the original input back to the output feature map obtained by passing the input through one or more convolutional layers.

Here is a very simple Residual block:

"""

class SimpleResidualBlock(nn.Module):

def __init__(self):

super().__init__()

self.conv1 = nn.Conv2d(in_channels=3, out_channels=3, kernel_size=3, stride=1, padding=1)

self.relu1 = nn.ReLU()

self.conv2 = nn.Conv2d(in_channels=3, out_channels=3, kernel_size=3, stride=1, padding=1)

self.relu2 = nn.ReLU()

def forward(self, x):

out = self.conv1(x)

out = self.relu1(out)

out = self.conv2(out)

return self.relu2(out) + x # ReLU can be applied before or after adding the input

simple_resnet = to_device(SimpleResidualBlock(), device)

for images, labels in train_dl:

out = simple_resnet(images)

print(out.shape)

break

del simple_resnet, images, labels

torch.cuda.empty_cache()

"""This seeming small change produces a drastic improvement in the performance of the model. Also, after each convolutional layer, we'll add a batch normalization layer, which normalizes the outputs of the previous layer.

Go through the following blog posts to learn more:

* Why and how residual blocks work: https://towardsdatascience.com/residual-blocks-building-blocks-of-resnet-fd90ca15d6ec

* Batch normalization and dropout explained: https://towardsdatascience.com/batch-normalization-and-dropout-in-neural-networks-explained-with-pytorch-47d7a8459bcd

We will use the ResNet9 architecture, as described in [this blog series](https://www.myrtle.ai/2018/09/24/how_to_train_your_resnet/) :

"""

def accuracy(outputs, labels):

_, preds = torch.max(outputs, dim=1)

return torch.tensor(torch.sum(preds == labels).item() / len(preds))

class ImageClassificationBase(nn.Module):

def training_step(self, batch):

images, labels = batch

out = self(images) # Generate predictions

loss = F.cross_entropy(out, labels) # Calculate loss

return loss

def validation_step(self, batch):

images, labels = batch

out = self(images) # Generate predictions

loss = F.cross_entropy(out, labels) # Calculate loss

acc = accuracy(out, labels) # Calculate accuracy

return {'val_loss': loss.detach(), 'val_acc': acc}

def validation_epoch_end(self, outputs):

batch_losses = [x['val_loss'] for x in outputs]

epoch_loss = torch.stack(batch_losses).mean() # Combine losses

batch_accs = [x['val_acc'] for x in outputs]

epoch_acc = torch.stack(batch_accs).mean() # Combine accuracies

return {'val_loss': epoch_loss.item(), 'val_acc': epoch_acc.item()}

def epoch_end(self, epoch, result):

print("Epoch [{}], last_lr: {:.5f}, train_loss: {:.4f}, val_loss: {:.4f}, val_acc: {:.4f}".format(

epoch, result['lrs'][-1], result['train_loss'], result['val_loss'], result['val_acc']))

def conv_block(in_channels, out_channels, pool=False):

layers = [nn.Conv2d(in_channels, out_channels, kernel_size=3, padding=1),

nn.BatchNorm2d(out_channels),

nn.ReLU(inplace=True)]

if pool: layers.append(nn.MaxPool2d(2))

return nn.Sequential(*layers)

class ResNet9(ImageClassificationBase):

def __init__(self, in_channels, num_classes):

super().__init__()

self.conv1 = conv_block(in_channels, 64)

self.conv2 = conv_block(64, 128, pool=True)

self.res1 = nn.Sequential(conv_block(128, 128), conv_block(128, 128))

self.conv3 = conv_block(128, 256, pool=True)

self.conv4 = conv_block(256, 512, pool=True)

self.res2 = nn.Sequential(conv_block(512, 512), conv_block(512, 512))

self.classifier = nn.Sequential(nn.MaxPool2d(4),

nn.Flatten(),

nn.Dropout(0.2),

nn.Linear(512, num_classes))

def forward(self, xb):

out = self.conv1(xb)

out = self.conv2(out)

out = self.res1(out) + out

out = self.conv3(out)

out = self.conv4(out)

out = self.res2(out) + out

out = self.classifier(out)

return out

model = to_device(ResNet9(3, 10), device)

model

"""## Training the model

Before we train the model, we're going to make a bunch of small but important improvements to our `fit` function:

* **Learning rate scheduling**: Instead of using a fixed learning rate, we will use a learning rate scheduler, which will change the learning rate after every batch of training. There are many strategies for varying the learning rate during training, and the one we'll use is called the **"One Cycle Learning Rate Policy"**, which involves starting with a low learning rate, gradually increasing it batch-by-batch to a high learning rate for about 30% of epochs, then gradually decreasing it to a very low value for the remaining epochs. Learn more: https://sgugger.github.io/the-1cycle-policy.html

* **Weight decay**: We also use weight decay, which is yet another regularization technique which prevents the weights from becoming too large by adding an additional term to the loss function.Learn more: https://towardsdatascience.com/this-thing-called-weight-decay-a7cd4bcfccab

* **Gradient clipping**: Apart from the layer weights and outputs, it also helpful to limit the values of gradients to a small range to prevent undesirable changes in parameters due to large gradient values. This simple yet effective technique is called gradient clipping. Learn more: https://towardsdatascience.com/what-is-gradient-clipping-b8e815cdfb48

Let's define a `fit_one_cycle` function to incorporate these changes. We'll also record the learning rate used for each batch.

"""

@torch.no_grad()

def evaluate(model, val_loader):

model.eval()

outputs = [model.validation_step(batch) for batch in val_loader]

return model.validation_epoch_end(outputs)

def get_lr(optimizer):

for param_group in optimizer.param_groups:

return param_group['lr']

def fit_one_cycle(epochs, max_lr, model, train_loader, val_loader,

weight_decay=0, grad_clip=None, opt_func=torch.optim.SGD):

torch.cuda.empty_cache()

history = []

# Set up cutom optimizer with weight decay

optimizer = opt_func(model.parameters(), max_lr, weight_decay=weight_decay)

# Set up one-cycle learning rate scheduler

sched = torch.optim.lr_scheduler.OneCycleLR(optimizer, max_lr, epochs=epochs,

steps_per_epoch=len(train_loader))

for epoch in range(epochs):

# Training Phase

model.train()

train_losses = []

lrs = []

for batch in train_loader:

loss = model.training_step(batch)

train_losses.append(loss)

loss.backward()

# Gradient clipping

if grad_clip:

nn.utils.clip_grad_value_(model.parameters(), grad_clip)

optimizer.step()

optimizer.zero_grad()

# Record & update learning rate

lrs.append(get_lr(optimizer))

sched.step()

# Validation phase

result = evaluate(model, val_loader)

result['train_loss'] = torch.stack(train_losses).mean().item()

result['lrs'] = lrs

model.epoch_end(epoch, result)

history.append(result)

return history

history = [evaluate(model, valid_dl)]

history

"""We're now ready to train our model. Instead of SGD (stochastic gradient descent), we'll use the Adam optimizer which uses techniques like momentum and adaptive learning rates for faster training. You can learn more about optimizers here: https://ruder.io/optimizing-gradient-descent/index.html"""

epochs = 8

max_lr = 0.01

grad_clip = 0.1

weight_decay = 1e-4

opt_func = torch.optim.Adam

# Commented out IPython magic to ensure Python compatibility.

# %%time

# history += fit_one_cycle(epochs, max_lr, model, train_dl, valid_dl,

# grad_clip=grad_clip,

# weight_decay=weight_decay,

# opt_func=opt_func)

train_time='4:24'

"""Our model trained to over **90% accuracy in under 5 minutes**! Try playing around with the data augmentations, network architecture & hyperparameters to achive the following results:

1. 94% accuracy in under 10 minutes (easy)

2. 90% accuracy in under 2.5 minutes (intermediate)

3. 94% accuracy in under 5 minutes (hard)

Let's plot the valdation set accuracies to study how the model improves over time.

"""

def plot_accuracies(history):

accuracies = [x['val_acc'] for x in history]

plt.plot(accuracies, '-x')

plt.xlabel('epoch')

plt.ylabel('accuracy')

plt.title('Accuracy vs. No. of epochs');

plot_accuracies(history)

"""We can also plot the training and validation losses to study the trend."""

def plot_losses(history):

train_losses = [x.get('train_loss') for x in history]

val_losses = [x['val_loss'] for x in history]

plt.plot(train_losses, '-bx')

plt.plot(val_losses, '-rx')

plt.xlabel('epoch')

plt.ylabel('loss')

plt.legend(['Training', 'Validation'])

plt.title('Loss vs. No. of epochs');

plot_losses(history)

"""It's clear from the trend that our model isn't overfitting to the training data just yet. Try removing batch normalization, data augmentation and residual layers one by one to study their effect on overfitting.

Finally, let's visualize how the learning rate changed over time, batch-by-batch over all the epochs.

"""

def plot_lrs(history):

lrs = np.concatenate([x.get('lrs', []) for x in history])

plt.plot(lrs)

plt.xlabel('Batch no.')

plt.ylabel('Learning rate')

plt.title('Learning Rate vs. Batch no.');

plot_lrs(history)

"""As expected, the learning rate starts at a low value, and gradually increases for 30% of the iterations to a maximum value of `0.01`, and then gradually decreases to a very small value.

## Testing with individual images

While we have been tracking the overall accuracy of a model so far, it's also a good idea to look at model's results on some sample images. Let's test out our model with some images from the predefined test dataset of 10000 images.

"""

def predict_image(img, model):

# Convert to a batch of 1

xb = to_device(img.unsqueeze(0), device)

# Get predictions from model

yb = model(xb)

# Pick index with highest probability

_, preds = torch.max(yb, dim=1)

# Retrieve the class label

return train_ds.classes[preds[0].item()]

img, label = valid_ds[0]

plt.imshow(img.permute(1, 2, 0).clamp(0, 1))

print('Label:', train_ds.classes[label], ', Predicted:', predict_image(img, model))

img, label = valid_ds[1002]

plt.imshow(img.permute(1, 2, 0))

print('Label:', valid_ds.classes[label], ', Predicted:', predict_image(img, model))

img, label = valid_ds[6153]

plt.imshow(img.permute(1, 2, 0))

print('Label:', train_ds.classes[label], ', Predicted:', predict_image(img, model))

"""Identifying where our model performs poorly can help us improve the model, by collecting more training data, increasing/decreasing the complexity of the model, and changing the hypeparameters.

## Save and Commit

Let's save the weights of the model, record the hyperparameters, and commit our experiment to Jovian. As you try different ideas, make sure to record every experiment so you can look back and analyze the results.

"""

torch.save(model.state_dict(), 'cifar10-resnet9.pth')

# Commented out IPython magic to ensure Python compatibility.

# %%script echo Disabled

# !pip install jovian --upgrade --quiet

# Commented out IPython magic to ensure Python compatibility.

# %%script echo Disabled

# import jovian

# Commented out IPython magic to ensure Python compatibility.

# %%script echo Disabled

# jovian.reset()

# jovian.log_hyperparams(arch='resnet9',

# epochs=epochs,

# lr=max_lr,

# scheduler='one-cycle',

# weight_decay=weight_decay,

# grad_clip=grad_clip,

# opt=opt_func.__name__)

# Commented out IPython magic to ensure Python compatibility.

# %%script echo Disabled

# jovian.log_metrics(val_loss=history[-1]['val_loss'],

# val_acc=history[-1]['val_acc'],

# train_loss=history[-1]['train_loss'],

# time=train_time)

# Commented out IPython magic to ensure Python compatibility.

# %%script echo Disabled

# jovian.commit(project=project_name, environment=None, outputs=['cifar10-resnet9.pth'])

"""## Summary and Further Reading

You are now ready to train state-of-the-art deep learning models from scratch. Try working on a project on your own by following these guidelines: https://jovian.ai/learn/deep-learning-with-pytorch-zero-to-gans/assignment/course-project

Here's a summary of the different techniques used in this tutorial to improve our model performance and reduce the training time:

* **Data normalization**: We normalized the image tensors by subtracting the mean and dividing by the standard deviation of pixels across each channel. Normalizing the data prevents the pixel values from any one channel from disproportionately affecting the losses and gradients. [Learn more](https://medium.com/@ml_kid/what-is-transform-and-transform-normalize-lesson-4-neural-networks-in-pytorch-ca97842336bd)

* **Data augmentation**: We applied random transformations while loading images from the training dataset. Specifically, we will pad each image by 4 pixels, and then take a random crop of size 32 x 32 pixels, and then flip the image horizontally with a 50% probability. [Learn more](https://www.analyticsvidhya.com/blog/2019/12/image-augmentation-deep-learning-pytorch/)

* **Residual connections**: One of the key changes to our CNN model was the addition of the resudial block, which adds the original input back to the output feature map obtained by passing the input through one or more convolutional layers. We used the ResNet9 architecture [Learn more](https://towardsdatascience.com/residual-blocks-building-blocks-of-resnet-fd90ca15d6ec).

* **Batch normalization**: After each convolutional layer, we added a batch normalization layer, which normalizes the outputs of the previous layer. This is somewhat similar to data normalization, except it's applied to the outputs of a layer, and the mean and standard deviation are learned parameters. [Learn more](https://towardsdatascience.com/batch-normalization-and-dropout-in-neural-networks-explained-with-pytorch-47d7a8459bcd)

* **Learning rate scheduling**: Instead of using a fixed learning rate, we will use a learning rate scheduler, which will change the learning rate after every batch of training. There are [many strategies](https://pytorch.org/docs/stable/optim.html#how-to-adjust-learning-rate) for varying the learning rate during training, and we used the "One Cycle Learning Rate Policy". [Learn more](https://sgugger.github.io/the-1cycle-policy.html)

* **Weight Decay**: We added weight decay to the optimizer, yet another regularization technique which prevents the weights from becoming too large by adding an additional term to the loss function. [Learn more](https://towardsdatascience.com/this-thing-called-weight-decay-a7cd4bcfccab)

* **Gradient clipping**: We also added gradient clippint, which helps limit the values of gradients to a small range to prevent undesirable changes in model parameters due to large gradient values during training. [Learn more.](https://towardsdatascience.com/what-is-gradient-clipping-b8e815cdfb48#63e0)

* **Adam optimizer**: Instead of SGD (stochastic gradient descent), we used the Adam optimizer which uses techniques like momentum and adaptive learning rates for faster training. There are many other optimizers to choose froma and experiment with. [Learn more.](https://ruder.io/optimizing-gradient-descent/index.html)

As an exercise, you should try applying each technique independently and see how much each one affects the performance and training time. As you try different experiments, you will start to cultivate the intuition for picking the right architectures, data augmentation & regularization techniques.

You are now ready to move on to the next tutorial in this series: [Generating Images using Generative Adversarial Networks](https://jovian.ai/aakashns/06b-anime-dcgan/)

"""

3. **Randomized data augmentations**: We will apply randomly chosen transformations while loading images from the training dataset. Specifically, we will pad each image by 4 pixels, and then take a random crop of size 32 x 32 pixels, and then flip the image horizontally with a 50% probability. Since the transformation will be applied randomly and dynamically each time a particular image is loaded, the model sees slightly different images in each epoch of training, which allows it generalize better.

"""

# Data transforms (normalization & data augmentation)

stats = ((0.4914, 0.4822, 0.4465), (0.2023, 0.1994, 0.2010))

train_tfms = tt.Compose([tt.RandomCrop(32, padding=4, padding_mode='reflect'),

tt.RandomHorizontalFlip(),

# tt.RandomRotate

# tt.RandomResizedCrop(256, scale=(0.5,0.9), ratio=(1, 1)),

# tt.ColorJitter(brightness=0.1, contrast=0.1, saturation=0.1, hue=0.1),

tt.ToTensor(),

tt.Normalize(*stats,inplace=True)])

valid_tfms = tt.Compose([tt.ToTensor(), tt.Normalize(*stats)])

# PyTorch datasets

train_ds = ImageFolder(data_dir+'/train', train_tfms)

valid_ds = ImageFolder(data_dir+'/test', valid_tfms)

"""Next, we can create data loaders for retrieving images in batches. We'll use a relatively large batch size of 500 to utlize a larger portion of the GPU RAM. You can try reducing the batch size & restarting the kernel if you face an "out of memory" error."""

batch_size = 400

# PyTorch data loaders

train_dl = DataLoader(train_ds, batch_size, shuffle=True, num_workers=3, pin_memory=True)

valid_dl = DataLoader(valid_ds, batch_size*2, num_workers=3, pin_memory=True)

"""Let's take a look at some sample images from the training dataloader. To display the images, we'll need to _denormalize_ the pixels values to bring them back into the range `(0,1)`."""

def denormalize(images, means, stds):

means = torch.tensor(means).reshape(1, 3, 1, 1)

stds = torch.tensor(stds).reshape(1, 3, 1, 1)

return images * stds + means

def show_batch(dl):

for images, labels in dl:

fig, ax = plt.subplots(figsize=(12, 12))

ax.set_xticks([]); ax.set_yticks([])

denorm_images = denormalize(images, *stats)

ax.imshow(make_grid(denorm_images[:64], nrow=8).permute(1, 2, 0).clamp(0,1))

break

show_batch(train_dl)

"""The colors seem out of place because of the normalization. Note that normalization is also applied during inference. If you look closely, you can see the cropping and reflection padding in some of the images. Horizontal flip is a bit difficult to detect from visual inspection.

## Using a GPU

To seamlessly use a GPU, if one is available, we define a couple of helper functions (`get_default_device` & `to_device`) and a helper class `DeviceDataLoader` to move our model & data to the GPU as required. These are described in more detail in a [previous tutorial](https://jovian.ml/aakashns/04-feedforward-nn#C21).

"""

def get_default_device():

"""Pick GPU if available, else CPU"""

if torch.cuda.is_available():

return torch.device('cuda')

else:

return torch.device('cpu')

def to_device(data, device):

"""Move tensor(s) to chosen device"""

if isinstance(data, (list,tuple)):

return [to_device(x, device) for x in data]

return data.to(device, non_blocking=True)

class DeviceDataLoader():

"""Wrap a dataloader to move data to a device"""

def __init__(self, dl, device):

self.dl = dl

self.device = device

def __iter__(self):

"""Yield a batch of data after moving it to device"""

for b in self.dl:

yield to_device(b, self.device)

def __len__(self):

"""Number of batches"""

return len(self.dl)

"""Based on where you're running this notebook, your default device could be a CPU (`torch.device('cpu')`) or a GPU (`torch.device('cuda')`)"""

device = get_default_device()

device

"""We can now wrap our training and validation data loaders using `DeviceDataLoader` for automatically transferring batches of data to the GPU (if available)."""

train_dl = DeviceDataLoader(train_dl, device)

valid_dl = DeviceDataLoader(valid_dl, device)

"""## Model with Residual Blocks and Batch Normalization

One of the key changes to our CNN model this time is the addition of the resudial block, which adds the original input back to the output feature map obtained by passing the input through one or more convolutional layers.

Here is a very simple Residual block:

"""

class SimpleResidualBlock(nn.Module):

def __init__(self):

super().__init__()

self.conv1 = nn.Conv2d(in_channels=3, out_channels=3, kernel_size=3, stride=1, padding=1)

self.relu1 = nn.ReLU()

self.conv2 = nn.Conv2d(in_channels=3, out_channels=3, kernel_size=3, stride=1, padding=1)

self.relu2 = nn.ReLU()

def forward(self, x):

out = self.conv1(x)

out = self.relu1(out)

out = self.conv2(out)

return self.relu2(out) + x # ReLU can be applied before or after adding the input

simple_resnet = to_device(SimpleResidualBlock(), device)

for images, labels in train_dl:

out = simple_resnet(images)

print(out.shape)

break

del simple_resnet, images, labels

torch.cuda.empty_cache()

"""This seeming small change produces a drastic improvement in the performance of the model. Also, after each convolutional layer, we'll add a batch normalization layer, which normalizes the outputs of the previous layer.

Go through the following blog posts to learn more:

* Why and how residual blocks work: https://towardsdatascience.com/residual-blocks-building-blocks-of-resnet-fd90ca15d6ec

* Batch normalization and dropout explained: https://towardsdatascience.com/batch-normalization-and-dropout-in-neural-networks-explained-with-pytorch-47d7a8459bcd

We will use the ResNet9 architecture, as described in [this blog series](https://www.myrtle.ai/2018/09/24/how_to_train_your_resnet/) :

"""

def accuracy(outputs, labels):

_, preds = torch.max(outputs, dim=1)

return torch.tensor(torch.sum(preds == labels).item() / len(preds))

class ImageClassificationBase(nn.Module):

def training_step(self, batch):

images, labels = batch

out = self(images) # Generate predictions

loss = F.cross_entropy(out, labels) # Calculate loss

return loss

def validation_step(self, batch):

images, labels = batch

out = self(images) # Generate predictions

loss = F.cross_entropy(out, labels) # Calculate loss

acc = accuracy(out, labels) # Calculate accuracy

return {'val_loss': loss.detach(), 'val_acc': acc}

def validation_epoch_end(self, outputs):

batch_losses = [x['val_loss'] for x in outputs]

epoch_loss = torch.stack(batch_losses).mean() # Combine losses

batch_accs = [x['val_acc'] for x in outputs]

epoch_acc = torch.stack(batch_accs).mean() # Combine accuracies

return {'val_loss': epoch_loss.item(), 'val_acc': epoch_acc.item()}

def epoch_end(self, epoch, result):

print("Epoch [{}], last_lr: {:.5f}, train_loss: {:.4f}, val_loss: {:.4f}, val_acc: {:.4f}".format(

epoch, result['lrs'][-1], result['train_loss'], result['val_loss'], result['val_acc']))

def conv_block(in_channels, out_channels, pool=False):

layers = [nn.Conv2d(in_channels, out_channels, kernel_size=3, padding=1),

nn.BatchNorm2d(out_channels),

nn.ReLU(inplace=True)]

if pool: layers.append(nn.MaxPool2d(2))

return nn.Sequential(*layers)

class ResNet9(ImageClassificationBase):

def __init__(self, in_channels, num_classes):

super().__init__()

self.conv1 = conv_block(in_channels, 64)

self.conv2 = conv_block(64, 128, pool=True)

self.res1 = nn.Sequential(conv_block(128, 128), conv_block(128, 128))

self.conv3 = conv_block(128, 256, pool=True)

self.conv4 = conv_block(256, 512, pool=True)

self.res2 = nn.Sequential(conv_block(512, 512), conv_block(512, 512))

self.classifier = nn.Sequential(nn.MaxPool2d(4),

nn.Flatten(),

nn.Dropout(0.2),

nn.Linear(512, num_classes))

def forward(self, xb):

out = self.conv1(xb)

out = self.conv2(out)

out = self.res1(out) + out

out = self.conv3(out)

out = self.conv4(out)

out = self.res2(out) + out

out = self.classifier(out)

return out

model = to_device(ResNet9(3, 10), device)

model

"""## Training the model

Before we train the model, we're going to make a bunch of small but important improvements to our `fit` function:

* **Learning rate scheduling**: Instead of using a fixed learning rate, we will use a learning rate scheduler, which will change the learning rate after every batch of training. There are many strategies for varying the learning rate during training, and the one we'll use is called the **"One Cycle Learning Rate Policy"**, which involves starting with a low learning rate, gradually increasing it batch-by-batch to a high learning rate for about 30% of epochs, then gradually decreasing it to a very low value for the remaining epochs. Learn more: https://sgugger.github.io/the-1cycle-policy.html

* **Weight decay**: We also use weight decay, which is yet another regularization technique which prevents the weights from becoming too large by adding an additional term to the loss function.Learn more: https://towardsdatascience.com/this-thing-called-weight-decay-a7cd4bcfccab

* **Gradient clipping**: Apart from the layer weights and outputs, it also helpful to limit the values of gradients to a small range to prevent undesirable changes in parameters due to large gradient values. This simple yet effective technique is called gradient clipping. Learn more: https://towardsdatascience.com/what-is-gradient-clipping-b8e815cdfb48

Let's define a `fit_one_cycle` function to incorporate these changes. We'll also record the learning rate used for each batch.

"""

@torch.no_grad()

def evaluate(model, val_loader):

model.eval()

outputs = [model.validation_step(batch) for batch in val_loader]

return model.validation_epoch_end(outputs)

def get_lr(optimizer):

for param_group in optimizer.param_groups:

return param_group['lr']

def fit_one_cycle(epochs, max_lr, model, train_loader, val_loader,

weight_decay=0, grad_clip=None, opt_func=torch.optim.SGD):

torch.cuda.empty_cache()

history = []

# Set up cutom optimizer with weight decay

optimizer = opt_func(model.parameters(), max_lr, weight_decay=weight_decay)

# Set up one-cycle learning rate scheduler

sched = torch.optim.lr_scheduler.OneCycleLR(optimizer, max_lr, epochs=epochs,

steps_per_epoch=len(train_loader))

for epoch in range(epochs):

# Training Phase

model.train()

train_losses = []

lrs = []

for batch in train_loader:

loss = model.training_step(batch)

train_losses.append(loss)

loss.backward()

# Gradient clipping

if grad_clip:

nn.utils.clip_grad_value_(model.parameters(), grad_clip)

optimizer.step()

optimizer.zero_grad()

# Record & update learning rate

lrs.append(get_lr(optimizer))

sched.step()

# Validation phase

result = evaluate(model, val_loader)

result['train_loss'] = torch.stack(train_losses).mean().item()

result['lrs'] = lrs

model.epoch_end(epoch, result)

history.append(result)

return history

history = [evaluate(model, valid_dl)]

history

"""We're now ready to train our model. Instead of SGD (stochastic gradient descent), we'll use the Adam optimizer which uses techniques like momentum and adaptive learning rates for faster training. You can learn more about optimizers here: https://ruder.io/optimizing-gradient-descent/index.html"""

epochs = 8

max_lr = 0.01

grad_clip = 0.1

weight_decay = 1e-4

opt_func = torch.optim.Adam

# Commented out IPython magic to ensure Python compatibility.

# %%time

# history += fit_one_cycle(epochs, max_lr, model, train_dl, valid_dl,

# grad_clip=grad_clip,

# weight_decay=weight_decay,

# opt_func=opt_func)

train_time='4:24'

"""Our model trained to over **90% accuracy in under 5 minutes**! Try playing around with the data augmentations, network architecture & hyperparameters to achive the following results:

1. 94% accuracy in under 10 minutes (easy)

2. 90% accuracy in under 2.5 minutes (intermediate)

3. 94% accuracy in under 5 minutes (hard)

Let's plot the valdation set accuracies to study how the model improves over time.

"""

def plot_accuracies(history):

accuracies = [x['val_acc'] for x in history]

plt.plot(accuracies, '-x')

plt.xlabel('epoch')

plt.ylabel('accuracy')

plt.title('Accuracy vs. No. of epochs');

plot_accuracies(history)

"""We can also plot the training and validation losses to study the trend."""

def plot_losses(history):

train_losses = [x.get('train_loss') for x in history]

val_losses = [x['val_loss'] for x in history]

plt.plot(train_losses, '-bx')

plt.plot(val_losses, '-rx')

plt.xlabel('epoch')

plt.ylabel('loss')

plt.legend(['Training', 'Validation'])

plt.title('Loss vs. No. of epochs');

plot_losses(history)

"""It's clear from the trend that our model isn't overfitting to the training data just yet. Try removing batch normalization, data augmentation and residual layers one by one to study their effect on overfitting.

Finally, let's visualize how the learning rate changed over time, batch-by-batch over all the epochs.

"""

def plot_lrs(history):

lrs = np.concatenate([x.get('lrs', []) for x in history])

plt.plot(lrs)

plt.xlabel('Batch no.')

plt.ylabel('Learning rate')

plt.title('Learning Rate vs. Batch no.');

plot_lrs(history)

"""As expected, the learning rate starts at a low value, and gradually increases for 30% of the iterations to a maximum value of `0.01`, and then gradually decreases to a very small value.

## Testing with individual images

While we have been tracking the overall accuracy of a model so far, it's also a good idea to look at model's results on some sample images. Let's test out our model with some images from the predefined test dataset of 10000 images.

"""

def predict_image(img, model):

# Convert to a batch of 1

xb = to_device(img.unsqueeze(0), device)

# Get predictions from model

yb = model(xb)

# Pick index with highest probability

_, preds = torch.max(yb, dim=1)

# Retrieve the class label

return train_ds.classes[preds[0].item()]

img, label = valid_ds[0]

plt.imshow(img.permute(1, 2, 0).clamp(0, 1))

print('Label:', train_ds.classes[label], ', Predicted:', predict_image(img, model))

img, label = valid_ds[1002]

plt.imshow(img.permute(1, 2, 0))

print('Label:', valid_ds.classes[label], ', Predicted:', predict_image(img, model))

img, label = valid_ds[6153]

plt.imshow(img.permute(1, 2, 0))

print('Label:', train_ds.classes[label], ', Predicted:', predict_image(img, model))

"""Identifying where our model performs poorly can help us improve the model, by collecting more training data, increasing/decreasing the complexity of the model, and changing the hypeparameters.

## Save and Commit

Let's save the weights of the model, record the hyperparameters, and commit our experiment to Jovian. As you try different ideas, make sure to record every experiment so you can look back and analyze the results.

"""

torch.save(model.state_dict(), 'cifar10-resnet9.pth')

# Commented out IPython magic to ensure Python compatibility.

# %%script echo Disabled

# !pip install jovian --upgrade --quiet

# Commented out IPython magic to ensure Python compatibility.

# %%script echo Disabled

# import jovian

# Commented out IPython magic to ensure Python compatibility.

# %%script echo Disabled

# jovian.reset()

# jovian.log_hyperparams(arch='resnet9',

# epochs=epochs,

# lr=max_lr,

# scheduler='one-cycle',

# weight_decay=weight_decay,

# grad_clip=grad_clip,

# opt=opt_func.__name__)

# Commented out IPython magic to ensure Python compatibility.

# %%script echo Disabled

# jovian.log_metrics(val_loss=history[-1]['val_loss'],

# val_acc=history[-1]['val_acc'],

# train_loss=history[-1]['train_loss'],

# time=train_time)

# Commented out IPython magic to ensure Python compatibility.

# %%script echo Disabled

# jovian.commit(project=project_name, environment=None, outputs=['cifar10-resnet9.pth'])

"""## Summary and Further Reading

You are now ready to train state-of-the-art deep learning models from scratch. Try working on a project on your own by following these guidelines: https://jovian.ai/learn/deep-learning-with-pytorch-zero-to-gans/assignment/course-project

Here's a summary of the different techniques used in this tutorial to improve our model performance and reduce the training time:

* **Data normalization**: We normalized the image tensors by subtracting the mean and dividing by the standard deviation of pixels across each channel. Normalizing the data prevents the pixel values from any one channel from disproportionately affecting the losses and gradients. [Learn more](https://medium.com/@ml_kid/what-is-transform-and-transform-normalize-lesson-4-neural-networks-in-pytorch-ca97842336bd)

* **Data augmentation**: We applied random transformations while loading images from the training dataset. Specifically, we will pad each image by 4 pixels, and then take a random crop of size 32 x 32 pixels, and then flip the image horizontally with a 50% probability. [Learn more](https://www.analyticsvidhya.com/blog/2019/12/image-augmentation-deep-learning-pytorch/)

* **Residual connections**: One of the key changes to our CNN model was the addition of the resudial block, which adds the original input back to the output feature map obtained by passing the input through one or more convolutional layers. We used the ResNet9 architecture [Learn more](https://towardsdatascience.com/residual-blocks-building-blocks-of-resnet-fd90ca15d6ec).

* **Batch normalization**: After each convolutional layer, we added a batch normalization layer, which normalizes the outputs of the previous layer. This is somewhat similar to data normalization, except it's applied to the outputs of a layer, and the mean and standard deviation are learned parameters. [Learn more](https://towardsdatascience.com/batch-normalization-and-dropout-in-neural-networks-explained-with-pytorch-47d7a8459bcd)

* **Learning rate scheduling**: Instead of using a fixed learning rate, we will use a learning rate scheduler, which will change the learning rate after every batch of training. There are [many strategies](https://pytorch.org/docs/stable/optim.html#how-to-adjust-learning-rate) for varying the learning rate during training, and we used the "One Cycle Learning Rate Policy". [Learn more](https://sgugger.github.io/the-1cycle-policy.html)

* **Weight Decay**: We added weight decay to the optimizer, yet another regularization technique which prevents the weights from becoming too large by adding an additional term to the loss function. [Learn more](https://towardsdatascience.com/this-thing-called-weight-decay-a7cd4bcfccab)

* **Gradient clipping**: We also added gradient clippint, which helps limit the values of gradients to a small range to prevent undesirable changes in model parameters due to large gradient values during training. [Learn more.](https://towardsdatascience.com/what-is-gradient-clipping-b8e815cdfb48#63e0)

* **Adam optimizer**: Instead of SGD (stochastic gradient descent), we used the Adam optimizer which uses techniques like momentum and adaptive learning rates for faster training. There are many other optimizers to choose froma and experiment with. [Learn more.](https://ruder.io/optimizing-gradient-descent/index.html)

As an exercise, you should try applying each technique independently and see how much each one affects the performance and training time. As you try different experiments, you will start to cultivate the intuition for picking the right architectures, data augmentation & regularization techniques.

You are now ready to move on to the next tutorial in this series: [Generating Images using Generative Adversarial Networks](https://jovian.ai/aakashns/06b-anime-dcgan/)

"""

3. **Randomized data augmentations**: We will apply randomly chosen transformations while loading images from the training dataset. Specifically, we will pad each image by 4 pixels, and then take a random crop of size 32 x 32 pixels, and then flip the image horizontally with a 50% probability. Since the transformation will be applied randomly and dynamically each time a particular image is loaded, the model sees slightly different images in each epoch of training, which allows it generalize better.

"""

# Data transforms (normalization & data augmentation)

stats = ((0.4914, 0.4822, 0.4465), (0.2023, 0.1994, 0.2010))

train_tfms = tt.Compose([tt.RandomCrop(32, padding=4, padding_mode='reflect'),

tt.RandomHorizontalFlip(),

# tt.RandomRotate

# tt.RandomResizedCrop(256, scale=(0.5,0.9), ratio=(1, 1)),

# tt.ColorJitter(brightness=0.1, contrast=0.1, saturation=0.1, hue=0.1),

tt.ToTensor(),

tt.Normalize(*stats,inplace=True)])

valid_tfms = tt.Compose([tt.ToTensor(), tt.Normalize(*stats)])

# PyTorch datasets

train_ds = ImageFolder(data_dir+'/train', train_tfms)

valid_ds = ImageFolder(data_dir+'/test', valid_tfms)

"""Next, we can create data loaders for retrieving images in batches. We'll use a relatively large batch size of 500 to utlize a larger portion of the GPU RAM. You can try reducing the batch size & restarting the kernel if you face an "out of memory" error."""

batch_size = 400

# PyTorch data loaders

train_dl = DataLoader(train_ds, batch_size, shuffle=True, num_workers=3, pin_memory=True)

valid_dl = DataLoader(valid_ds, batch_size*2, num_workers=3, pin_memory=True)

"""Let's take a look at some sample images from the training dataloader. To display the images, we'll need to _denormalize_ the pixels values to bring them back into the range `(0,1)`."""

def denormalize(images, means, stds):

means = torch.tensor(means).reshape(1, 3, 1, 1)

stds = torch.tensor(stds).reshape(1, 3, 1, 1)

return images * stds + means

def show_batch(dl):

for images, labels in dl:

fig, ax = plt.subplots(figsize=(12, 12))

ax.set_xticks([]); ax.set_yticks([])

denorm_images = denormalize(images, *stats)

ax.imshow(make_grid(denorm_images[:64], nrow=8).permute(1, 2, 0).clamp(0,1))

break

show_batch(train_dl)

"""The colors seem out of place because of the normalization. Note that normalization is also applied during inference. If you look closely, you can see the cropping and reflection padding in some of the images. Horizontal flip is a bit difficult to detect from visual inspection.

## Using a GPU

To seamlessly use a GPU, if one is available, we define a couple of helper functions (`get_default_device` & `to_device`) and a helper class `DeviceDataLoader` to move our model & data to the GPU as required. These are described in more detail in a [previous tutorial](https://jovian.ml/aakashns/04-feedforward-nn#C21).

"""

def get_default_device():

"""Pick GPU if available, else CPU"""

if torch.cuda.is_available():

return torch.device('cuda')

else:

return torch.device('cpu')

def to_device(data, device):

"""Move tensor(s) to chosen device"""

if isinstance(data, (list,tuple)):

return [to_device(x, device) for x in data]

return data.to(device, non_blocking=True)

class DeviceDataLoader():

"""Wrap a dataloader to move data to a device"""

def __init__(self, dl, device):

self.dl = dl

self.device = device

def __iter__(self):

"""Yield a batch of data after moving it to device"""

for b in self.dl:

yield to_device(b, self.device)

def __len__(self):

"""Number of batches"""

return len(self.dl)

"""Based on where you're running this notebook, your default device could be a CPU (`torch.device('cpu')`) or a GPU (`torch.device('cuda')`)"""

device = get_default_device()

device

"""We can now wrap our training and validation data loaders using `DeviceDataLoader` for automatically transferring batches of data to the GPU (if available)."""

train_dl = DeviceDataLoader(train_dl, device)

valid_dl = DeviceDataLoader(valid_dl, device)

"""## Model with Residual Blocks and Batch Normalization

One of the key changes to our CNN model this time is the addition of the resudial block, which adds the original input back to the output feature map obtained by passing the input through one or more convolutional layers.

Here is a very simple Residual block:

"""

class SimpleResidualBlock(nn.Module):

def __init__(self):

super().__init__()

self.conv1 = nn.Conv2d(in_channels=3, out_channels=3, kernel_size=3, stride=1, padding=1)

self.relu1 = nn.ReLU()

self.conv2 = nn.Conv2d(in_channels=3, out_channels=3, kernel_size=3, stride=1, padding=1)

self.relu2 = nn.ReLU()

def forward(self, x):

out = self.conv1(x)

out = self.relu1(out)

out = self.conv2(out)

return self.relu2(out) + x # ReLU can be applied before or after adding the input

simple_resnet = to_device(SimpleResidualBlock(), device)

for images, labels in train_dl:

out = simple_resnet(images)

print(out.shape)

break

del simple_resnet, images, labels

torch.cuda.empty_cache()

"""This seeming small change produces a drastic improvement in the performance of the model. Also, after each convolutional layer, we'll add a batch normalization layer, which normalizes the outputs of the previous layer.

Go through the following blog posts to learn more:

* Why and how residual blocks work: https://towardsdatascience.com/residual-blocks-building-blocks-of-resnet-fd90ca15d6ec

* Batch normalization and dropout explained: https://towardsdatascience.com/batch-normalization-and-dropout-in-neural-networks-explained-with-pytorch-47d7a8459bcd

We will use the ResNet9 architecture, as described in [this blog series](https://www.myrtle.ai/2018/09/24/how_to_train_your_resnet/) :

"""

def accuracy(outputs, labels):

_, preds = torch.max(outputs, dim=1)

return torch.tensor(torch.sum(preds == labels).item() / len(preds))

class ImageClassificationBase(nn.Module):

def training_step(self, batch):

images, labels = batch

out = self(images) # Generate predictions

loss = F.cross_entropy(out, labels) # Calculate loss

return loss

def validation_step(self, batch):

images, labels = batch

out = self(images) # Generate predictions

loss = F.cross_entropy(out, labels) # Calculate loss

acc = accuracy(out, labels) # Calculate accuracy

return {'val_loss': loss.detach(), 'val_acc': acc}

def validation_epoch_end(self, outputs):

batch_losses = [x['val_loss'] for x in outputs]

epoch_loss = torch.stack(batch_losses).mean() # Combine losses

batch_accs = [x['val_acc'] for x in outputs]

epoch_acc = torch.stack(batch_accs).mean() # Combine accuracies

return {'val_loss': epoch_loss.item(), 'val_acc': epoch_acc.item()}

def epoch_end(self, epoch, result):

print("Epoch [{}], last_lr: {:.5f}, train_loss: {:.4f}, val_loss: {:.4f}, val_acc: {:.4f}".format(

epoch, result['lrs'][-1], result['train_loss'], result['val_loss'], result['val_acc']))

def conv_block(in_channels, out_channels, pool=False):

layers = [nn.Conv2d(in_channels, out_channels, kernel_size=3, padding=1),

nn.BatchNorm2d(out_channels),

nn.ReLU(inplace=True)]

if pool: layers.append(nn.MaxPool2d(2))

return nn.Sequential(*layers)

class ResNet9(ImageClassificationBase):

def __init__(self, in_channels, num_classes):

super().__init__()

self.conv1 = conv_block(in_channels, 64)

self.conv2 = conv_block(64, 128, pool=True)

self.res1 = nn.Sequential(conv_block(128, 128), conv_block(128, 128))

self.conv3 = conv_block(128, 256, pool=True)

self.conv4 = conv_block(256, 512, pool=True)

self.res2 = nn.Sequential(conv_block(512, 512), conv_block(512, 512))

self.classifier = nn.Sequential(nn.MaxPool2d(4),

nn.Flatten(),

nn.Dropout(0.2),